Camera Selection – How Can I Find the Right Camera for My Image Processing System?

Choosing the right vision system can be overwhelming. The large number of camera models, technologies, and functions often makes the decision difficult. We are here to help you keep track of everything and find the right camera for your application.

Fundamentals of Image Processing Systems

Questions that we answer in detail in the White Paper

Why are requirements definitions important?

What do I need to know about the camera's resolution and sensor?

Color or monochrome camera?

Which camera functions are important?

How important are the scale and imaging performance of the camera and what role does image quality play?

Step by step to optimal image processing

Get started with a well-founded analysis. Ask yourself two questions:

What do I need to see with the camera?

What characteristics are necessary for my camera to deliver precisely that?

The answers to these questions set the direction for choosing the right camera.

Decision #1: area scan camera, line scan camera, or 3D camera?

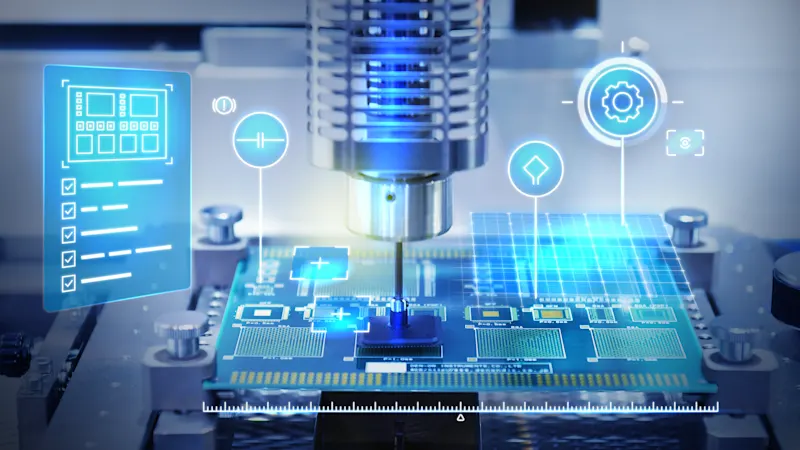

Choosing the right industrial camera or machine vision camera depends on your application and specific requirements. Whether it is an area scan camera, line scan camera, or 3D camera, each technology offers unique advantages and is optimized for different application areas. They differ primarily in the way they capture images, a crucial factor for your machine vision application.

Area scan cameras

Area scan cameras are equipped with a rectangular sensor, consisting of numerous rows of pixels, all of which are exposed at exactly the same time. All image data is therefore recorded at the same time, and processed at the same time.

Area scan cameras are typically used in a variety of industrial applications, in medical and life sciences, in traffic and transportation, or in security and surveillance.

Line scan cameras

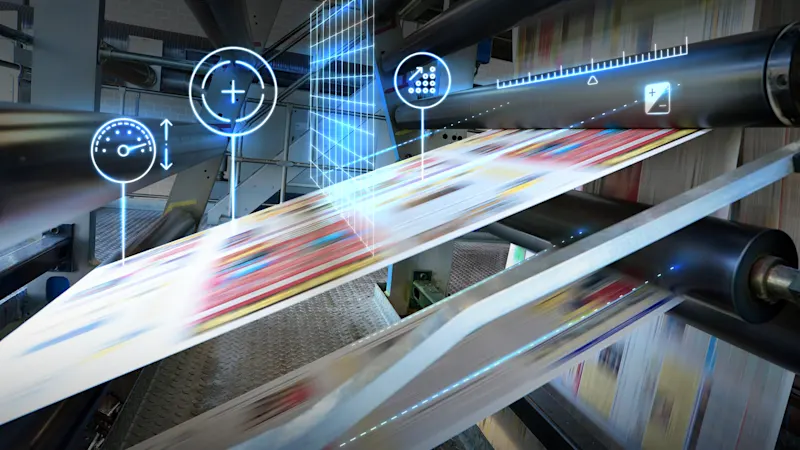

Line scan cameras work with a sensor that is made up of only 1, 2, or 3 pixel rows. The image data is exposed line by line, and also reassembled and processed into an image line by line.

Line scan cameras are used to inspect products and goods that are transported on conveyor belts, sometimes at very high speeds. Typical industries include printing, sorting, packaging, food and all types of surface inspection.
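Because a line scan image is built up one line at a time while the object moves, the line rate has to match the transport speed. The following is a minimal sketch of that relationship, assuming square object pixels (each captured line should cover the same distance along the transport direction as one pixel covers across it). The function name and the example values are hypothetical.

```python
def required_line_rate_hz(transport_speed_mm_s: float,
                          object_pixel_size_mm: float) -> float:
    """Return the lines per second needed so that the object moves
    exactly one object-pixel between two consecutive lines."""
    if transport_speed_mm_s < 0:
        raise ValueError("transport speed must not be negative")
    if object_pixel_size_mm <= 0:
        raise ValueError("object pixel size must be positive")
    return transport_speed_mm_s / object_pixel_size_mm


if __name__ == "__main__":
    # Hypothetical conveyor: 2 m/s belt speed, 0.1 mm per pixel on the object.
    rate = required_line_rate_hz(2000.0, 0.1)
    print(f"Required line rate: {rate:.0f} lines/s")
```

A line rate below this value stretches the image along the transport direction; a higher line rate compresses it.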

3D cameras

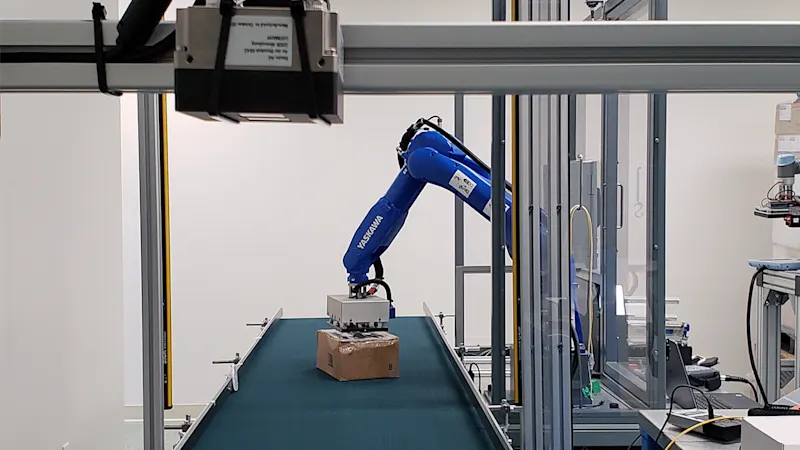

3D cameras, on the other hand, use advanced sensor technologies to capture depth information in addition to two-dimensional images. This is usually done using techniques such as time-of-flight (ToF), stereo vision, or structured light. These cameras measure the spatial position of objects and create a precise 3D model of the captured scene or object.

Applications for 3D cameras can be found in robotics, automation, logistics (e.g. for volume measurement or object recognition), medical (e.g. for surgical navigation), and the entertainment industry (for animations and virtual reality).
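Two of the depth techniques mentioned above can be reduced to short textbook formulas. The sketch below assumes an idealized time-of-flight measurement (distance from the round-trip time of light) and an idealized, rectified stereo pair (depth from focal length, baseline, and disparity). The function names and example values are hypothetical, and real 3D cameras apply further calibration and filtering.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def tof_distance_m(round_trip_time_s: float) -> float:
    """Time-of-flight: the light travels to the object and back,
    so the distance is half of the path covered."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must not be negative")
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


def stereo_depth_m(focal_length_px: float, baseline_m: float,
                   disparity_px: float) -> float:
    """Stereo vision: depth is inversely proportional to the disparity
    between the left and the right image (rectified pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical measurements for illustration only.
    print(f"ToF distance: {tof_distance_m(10e-9):.3f} m")
    print(f"Stereo depth: {stereo_depth_m(1000.0, 0.1, 50.0):.3f} m")
```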

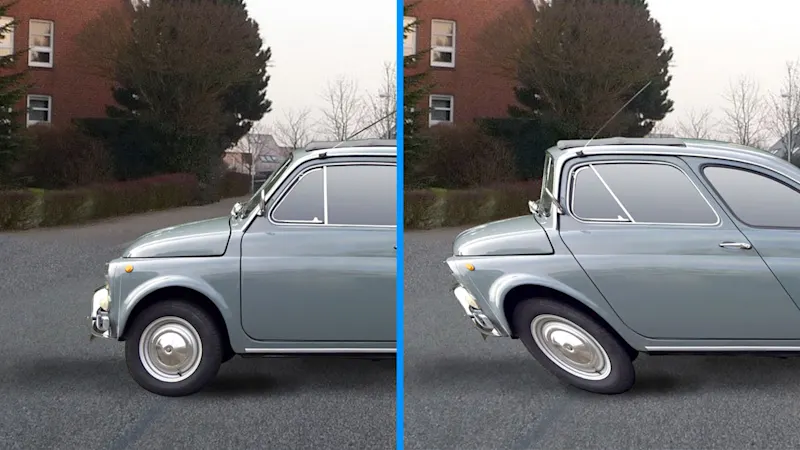

Decision #2: Monochrome or Color Camera?

A relatively simple decision and one that is usually answered by the goal of your application: the image you require. Do you need it in color to evaluate the results, or is black and white sufficient? If color isn't mandatory, a monochrome camera is typically the better choice, as monochrome cameras are more sensitive and deliver more detailed images. In many applications, for example in intelligent traffic systems, a combination of b/w and color cameras is frequently used to satisfy the specific national legal requirements for evidence-grade images.

Decision #3: Sensor types, shutter technique, frame rates

This step involves picking a suitable sensor, built either around CMOS or CCD sensor technology, and choosing the type of shutter technique: global or rolling shutter. The next consideration is the frame rate, meaning the number of images that a camera must deliver per second to handle its task seamlessly.

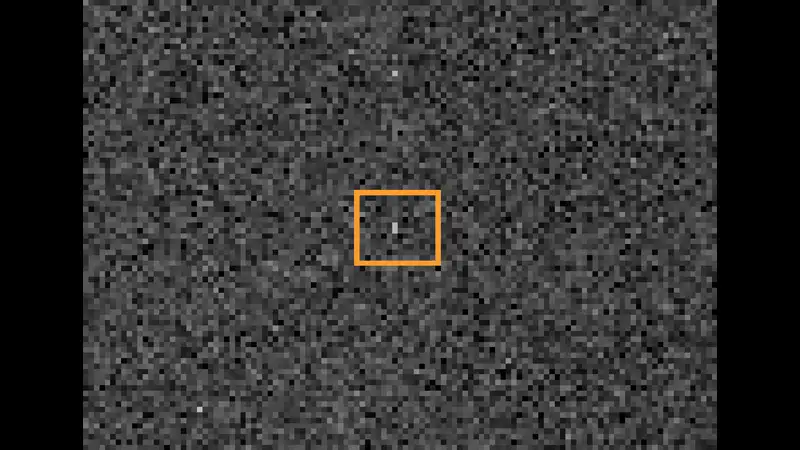

CCD or CMOS?

The fundamental difference between the two sensor technologies is in their structure.

CMOS sensors are fast, flexible, and dominate the camera mass market, e.g. in SLR cameras. They integrate the electronics directly on the sensor surface, which allows image data to be read out particularly quickly.

CCD sensors score points with maximum light sensitivity and excellent image quality – ideal for low-light applications such as astronomy. However, they reach their limits in fast-paced applications.


Shutter techniques: global or rolling shutter

A simple but extremely important requirement must be met here: the shutter technology must be suitable for the application. The shutter protects the sensor in the camera from incident light and opens exactly at the time of exposure. The selected exposure time ensures that the right "dose" of light reaches the sensor by controlling exactly how long the shutter remains open. The difference between a global shutter and a rolling shutter lies in the way they handle that exposure.
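The difference in exposure handling can be illustrated with a simplified timing model. The sketch below assumes that a global shutter starts the exposure of all sensor rows at the same instant, while a rolling shutter starts each row one row time after the previous one. The function names and the 10 µs row time are hypothetical and not taken from any specific sensor.

```python
def row_exposure_starts_us(num_rows: int, row_time_us: float,
                           shutter: str) -> list[float]:
    """Return the exposure start time of every sensor row in microseconds."""
    if num_rows <= 0:
        raise ValueError("num_rows must be positive")
    if shutter == "global":
        # All rows begin their exposure simultaneously.
        return [0.0] * num_rows
    if shutter == "rolling":
        # Each row starts one row time later than the row above it.
        return [row * row_time_us for row in range(num_rows)]
    raise ValueError("shutter must be 'global' or 'rolling'")


def exposure_skew_us(starts_us: list[float]) -> float:
    """Time offset between the first and the last row of one frame."""
    return max(starts_us) - min(starts_us)


if __name__ == "__main__":
    for mode in ("global", "rolling"):
        starts = row_exposure_starts_us(1088, 10.0, mode)
        print(f"{mode:8s} skew: {exposure_skew_us(starts):.0f} us")
```

In this model, an object that moves during the skew time appears distorted with a rolling shutter, which is why fast-moving scenes usually call for a global shutter.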


Frame rate

The frame rate is expressed in "frames per second" (fps); in line scan applications, the equivalent figure is the "line rate". The frame rate indicates how many images a sensor can capture and process per second.

The higher the frame rate, the faster the sensor works. A faster sensor allows for more frames per second, which in turn increases the volume of data.

With area scan cameras, the frame rate varies significantly depending on the interface used, from a low 10 fps to a fast 340 fps, and the amount of data varies with it. The frame rate that is necessary or possible depends on the requirements of the respective image processing system.
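The link between frame rate, data volume, and interface can be estimated with simple arithmetic. The sketch below assumes uncompressed image data without protocol overhead. The function names are hypothetical, and the usable bandwidth of 100 MB/s is a placeholder rather than the specification of a particular interface.

```python
def frame_size_bytes(width_px: int, height_px: int,
                     bits_per_pixel: int) -> float:
    """Size of one uncompressed frame in bytes."""
    return width_px * height_px * bits_per_pixel / 8


def data_rate_mb_per_s(width_px: int, height_px: int,
                       bits_per_pixel: int, fps: float) -> float:
    """Raw image data produced per second in megabytes (1 MB = 1e6 bytes)."""
    return frame_size_bytes(width_px, height_px, bits_per_pixel) * fps / 1e6


def max_fps_for_bandwidth(width_px: int, height_px: int, bits_per_pixel: int,
                          usable_bandwidth_mb_per_s: float) -> float:
    """Highest frame rate a link with the given usable bandwidth can carry."""
    frame_bytes = frame_size_bytes(width_px, height_px, bits_per_pixel)
    return usable_bandwidth_mb_per_s * 1e6 / frame_bytes


if __name__ == "__main__":
    # 2048 x 1088 pixels at 8 bits per pixel, at the two frame rates above.
    for fps in (10, 340):
        rate = data_rate_mb_per_s(2048, 1088, 8, fps)
        print(f"{fps:3d} fps -> {rate:.1f} MB/s")
    # Placeholder bandwidth: use the real usable throughput of your interface.
    print(f"Max fps at 100 MB/s: {max_fps_for_bandwidth(2048, 1088, 8, 100.0):.1f}")
```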

Decision #4: resolution, sensor, and pixel sizes

When choosing the right camera, resolution, sensor size, and pixel quality play a central role. These factors have a direct impact on image quality, light sensitivity, and how well the camera can meet your specific needs.

Resolution

The resolution of a camera is often described with a specification such as "2048 x 1088". These numbers represent the number of pixels – in this case, 2048 horizontal and 1088 vertical pixels. Multiplied, this results in a total resolution of 2,228,224 pixels or 2.2 megapixels (million pixels, or "MP" for short).

To find out what resolution your application requires, a simple formula helps:

Resolution = Object Size / Size of the Detail to Be Inspected

For example, if you want to capture the eye color of a person who is about 2 m tall and show a detail of 1 mm, the calculation is:

Resolution = Height / Eye detail = 2,000 mm / 1 mm = 2,000 px in x and y, i.e. 2,000 x 2,000 px = 4 MP

In this case, you'll need a camera with at least 4 megapixels to clearly display the detail you want.
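The calculation above can be written as a small helper. This is a minimal sketch that reproduces the example; the function names are hypothetical, and the optional `pixels_per_detail` parameter is an assumption that is not part of the formula in the text (the text uses one pixel per detail).

```python
import math


def required_pixels(object_size_mm: float, detail_size_mm: float,
                    pixels_per_detail: int = 1) -> int:
    """Pixels needed along one axis: object size / size of the detail."""
    if object_size_mm <= 0 or detail_size_mm <= 0:
        raise ValueError("sizes must be positive")
    if pixels_per_detail < 1:
        raise ValueError("pixels_per_detail must be at least 1")
    return math.ceil(object_size_mm / detail_size_mm * pixels_per_detail)


def megapixels(width_px: int, height_px: int) -> float:
    """Total pixel count in megapixels (million pixels)."""
    return width_px * height_px / 1e6


if __name__ == "__main__":
    # Example from the text: 2 m object, 1 mm detail, same value in x and y.
    side = required_pixels(2000.0, 1.0)
    print(f"{side} px per axis -> {megapixels(side, side):.1f} MP")
    # Specification from the text: 2048 x 1088 pixels.
    print(f"2048 x 1088 -> {megapixels(2048, 1088):.1f} MP")
```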

Sensor and pixel size

Fact #1:

The easy part first: large sensor and pixel surfaces can capture more light. Light is the signal used by the sensor to generate and process the image data. So far, so simple. Now stay with us: The greater the available surface, the better the signal-to-noise ratio (SNR), especially for large pixels measuring 3.5 µm or more. A higher SNR translates into better image quality. An SNR of 42 dB would be considered a solid result.
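To get a feeling for what 42 dB means, the value can be converted into a linear ratio. The sketch below assumes the 20·log10 convention commonly used for sensor SNR figures, and an idealized shot-noise-limited pixel, in which the SNR equals the square root of the number of collected electrons (read noise and dark noise are ignored). The function names are hypothetical.

```python
import math


def snr_db(snr_ratio: float) -> float:
    """Convert a linear signal-to-noise ratio into decibels."""
    if snr_ratio <= 0:
        raise ValueError("SNR ratio must be positive")
    return 20.0 * math.log10(snr_ratio)


def snr_ratio_from_db(value_db: float) -> float:
    """Convert an SNR in decibels back into a linear ratio."""
    return 10.0 ** (value_db / 20.0)


def shot_noise_limited_snr(electrons: float) -> float:
    """Idealized pixel: signal = N electrons, noise = sqrt(N)."""
    if electrons <= 0:
        raise ValueError("electron count must be positive")
    return math.sqrt(electrons)


if __name__ == "__main__":
    ratio = snr_ratio_from_db(42.0)
    print(f"42 dB corresponds to a ratio of about {ratio:.0f}:1")
    # Electrons a shot-noise-limited pixel would need to reach that ratio.
    print(f"Electrons needed: about {ratio ** 2:.0f}")
```

Under these assumptions, a larger pixel that collects more electrons reaches a higher SNR, which matches the statement above about large pixel surfaces.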

Fact #2:

A large sensor provides a larger space which can fit more pixels, producing a higher resolution. The real benefit here is that the individual pixels are still large enough to ensure a good SNR — unlike on smaller sensors, where there is less space available and thus smaller pixels must be used.

Fact #3:

Even large sensors with a high pixel count won't do much without the right lens. They can only reach their full potential if the lens they are combined with can actually resolve this level of detail.

Fact #4:

Large sensors are also always more costly, since more space means more silicon.

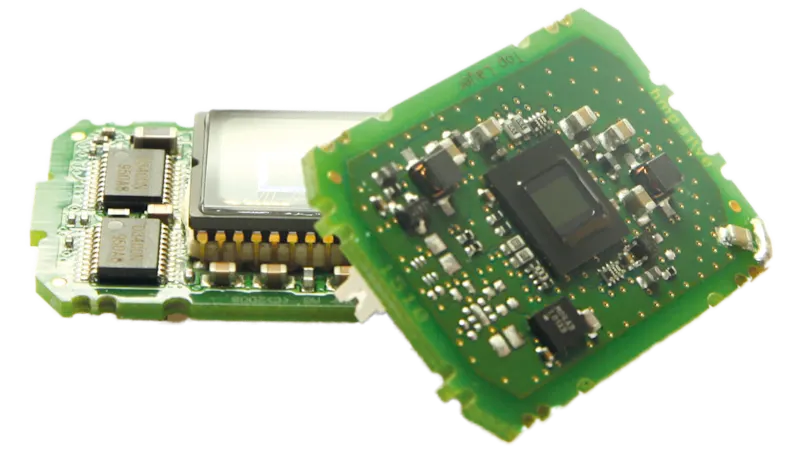

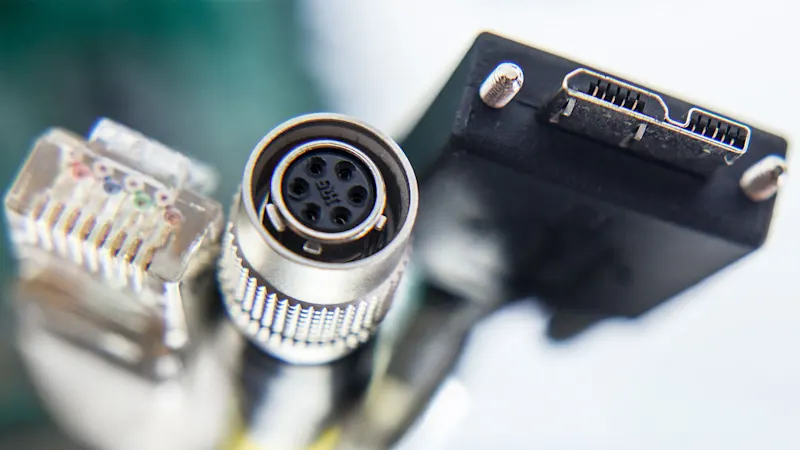

Decision #5: The interface and housing size

Choosing the right interface and housing size plays a central role in integrating the camera into your vision system. These two factors influence not only the technical performance, but also the flexibility and compatibility of the overall solution. In the following, you will learn how different interface technologies and housing sizes can optimally support your application.

Interface

The interface serves as the liaison between the camera and PC, forwarding image data from the hardware (the camera sensor) to the software (the components that process the images). Finding the best interface for your application means finding the optimal balance of performance, cost, and reliability by weighing a series of different factors against one another.

Modern standards such as GigE Vision, USB3 Vision, and CoaXPress ensure compatibility with standards-compliant components, while older technologies such as FireWire and USB 2.0 are less suitable for modern systems.

Housing

Directly tied to the choice of interface is the size of the camera housing. It is important in terms of the overall integration into the vision system. In applications where cameras are organized next to one another (known as multi-camera setups), every millimeter of space is crucial to properly record the entire width of a material web.

Basler cameras range from the small 29 mm x 29 mm housing size of the ace 2 and dart models to cameras with large sensors and larger dimensions, such as those of the boost series.

Decision #6: useful camera features

Cameras are often prepared at the factory to support their users in various tasks in the best possible way. All our cameras are factory-equipped with a set of helpful features that improve image quality, analyze image data more effectively, and control processes with the highest precision.

When designing your image processing system, you will most likely come across these three features:

AOI (Area of Interest)

Allows you to select a specific area of interest within the frame, or multiple different AOIs at once. The benefit is that only the parts of the frame needed for assessing the image are processed, which speeds up the readout of the camera data.
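The effect of an AOI on the amount of data can be sketched in a few lines. This example is a software illustration only: it crops a frame stored as a nested list and computes the share of pixels that remains. It does not use any camera API, and the function and parameter names (`offset_x`, `offset_y`, `width`, `height`) are hypothetical.

```python
def crop_aoi(frame: list[list[int]], offset_x: int, offset_y: int,
             width: int, height: int) -> list[list[int]]:
    """Return only the pixels inside the area of interest."""
    frame_height = len(frame)
    frame_width = len(frame[0]) if frame else 0
    if (offset_x < 0 or offset_y < 0 or width <= 0 or height <= 0
            or offset_x + width > frame_width
            or offset_y + height > frame_height):
        raise ValueError("AOI must lie completely inside the frame")
    rows = frame[offset_y:offset_y + height]
    return [row[offset_x:offset_x + width] for row in rows]


def aoi_data_fraction(full_width: int, full_height: int,
                      aoi_width: int, aoi_height: int) -> float:
    """Share of the full-frame pixel count that the AOI contains."""
    return (aoi_width * aoi_height) / (full_width * full_height)


if __name__ == "__main__":
    # Tiny 6 x 4 test frame; each pixel value encodes its row and column.
    frame = [[10 * r + c for c in range(6)] for r in range(4)]
    print(crop_aoi(frame, offset_x=2, offset_y=1, width=3, height=2))
    print(f"Data share: {aoi_data_fraction(6, 4, 3, 2):.0%}")
```

How much the frame rate actually rises with a smaller AOI depends on the sensor; the data share computed here is only an indication of the reduced readout load.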

Auto features

Basler cameras offer a series of so-called Auto features, such as automatic exposure adjustment and automatic gain. By allowing the exposure time and gain parameters to adapt automatically to changing ambient conditions, these two Auto features keep the image brightness constant.
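The idea behind automatic exposure adjustment can be illustrated with a simple control step. The sketch below is not the algorithm used in any particular camera. It assumes that image brightness is roughly proportional to exposure time, and all names, limits, and the damping factor are hypothetical.

```python
def next_exposure_us(current_exposure_us: float, mean_brightness: float,
                     target_brightness: float = 128.0,
                     min_us: float = 10.0, max_us: float = 100_000.0,
                     damping: float = 0.5) -> float:
    """Propose the exposure time for the next frame.

    damping = 1.0 corrects the full brightness error in one step;
    smaller values approach the target more gradually.
    """
    if current_exposure_us <= 0 or target_brightness <= 0:
        raise ValueError("exposure and target brightness must be positive")
    if mean_brightness <= 0:
        # Completely dark image: jump to the longest allowed exposure.
        return max_us
    correction = (target_brightness / mean_brightness) ** damping
    proposed = current_exposure_us * correction
    # Keep the result inside the allowed exposure range.
    return min(max(proposed, min_us), max_us)


if __name__ == "__main__":
    exposure = 1000.0
    # Hypothetical sequence of measured mean gray values (8-bit scale).
    for brightness in (40.0, 70.0, 100.0, 120.0):
        exposure = next_exposure_us(exposure, brightness)
        print(f"brightness {brightness:5.1f} -> next exposure {exposure:8.1f} us")
```

An automatic gain function could follow the same pattern, typically engaging once the exposure time has reached its upper limit.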

Sequencer

The sequencer is used to read out specific image sequences. This means, for example, that various AOIs can be programmed and then automatically read out sequentially by the sequencer.
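Conceptually, a sequencer steps through a list of predefined parameter sets, one per frame, and starts over at the end of the list. The sketch below models only this behavior in plain Python. It is not a camera API, and the `SequencerSet` class and its fields are hypothetical.

```python
from dataclasses import dataclass
from itertools import cycle, islice


@dataclass(frozen=True)
class SequencerSet:
    """One hypothetical parameter set: an AOI plus an exposure time."""
    offset_x: int
    offset_y: int
    width: int
    height: int
    exposure_us: float


def run_sequence(sets: list[SequencerSet],
                 num_frames: int) -> list[SequencerSet]:
    """Return the parameter set applied to each of the next num_frames frames."""
    if not sets:
        raise ValueError("at least one sequencer set is required")
    if num_frames < 0:
        raise ValueError("num_frames must not be negative")
    # cycle() repeats the sets endlessly; islice() takes one per frame.
    return list(islice(cycle(sets), num_frames))


if __name__ == "__main__":
    sets = [
        SequencerSet(0, 0, 640, 480, exposure_us=500.0),
        SequencerSet(640, 0, 640, 480, exposure_us=2000.0),
    ]
    for index, active in enumerate(run_sequence(sets, 5)):
        print(f"frame {index}: {active}")
```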

What's the best way to compare modern CMOS cameras?

There is a considerable number of cameras from different manufacturers for almost every sensor model. Despite having the same sensor, the cameras are not identical. Which aspects are important when comparing cameras?

EMVA data is required

Selecting the right camera for an application is a central question that has also occupied the European Machine Vision Association (EMVA). The result: the EMVA 1288 standard. This defines methods for gathering data that characterize the image quality and sensitivity of a camera or sensor.

Comparing EMVA data is a must when choosing a camera, as it provides information about how powerful and suitable a camera is.

However, EMVA data does not cover all potential problems, such as image artifacts like the "shutter line" or time-varying disturbances such as defective or flashing pixels. These errors are often immediately noticeable to the human eye, but are not taken into account in the values.

A thorough and application-oriented test of a sample camera is therefore essential. It is helpful to be able to rely on a consistent quality standard, which often only larger brand manufacturers offer. This saves testing time and simplifies application optimization.

Bonus: Firmware Features and High Data Transmission Stability

Cameras with the same sensor can also behave very differently because the cameras’ firmware and software vary. Conformity with standards such as GenICam (for "addressing" the camera) is important here, as is compatibility with the GigE Vision and USB3 Vision interface standards. These standards regulate and define the communication channels and interfaces of the camera, which reduces integration effort while providing reliable quality during data transmission.

There can also be a variety of differences when it comes to the efficiency of the firmware and associated software. The first relates to the work required to integrate the camera: Not all camera makers can offer mature software and driver environments for controlling the camera or established programming environments (compatible with various operating systems and programming languages). These are, however, an absolute must for any major design-in.

Data stability is another area where cameras differ. If the camera firmware makes use of a frame buffer, for example, this greatly increases data stability, especially at higher bandwidths and frame rates.

To a large extent, it is these standardized or proprietary features that improve the performance of the vision system, which is why some cameras achieve significantly better results than others from the same sensor.

How do I start? What's next?

Selecting the right camera is crucial to the performance of your machine vision system. Our tools help you find the right components for your vision system or application. Explore our portfolio and find the camera that perfectly suits your requirements.