Cameras for Fluorescence Microscopy OEM

Fluorescence microscopy is a broad term covering numerous applications. These range from basic applications in the life sciences to highly developed techniques in which very few photons or individual molecules are detected and localized using specialized high-end hardware and software.

When you choose a camera for fluorescence microscopy, it is important to find a balance between certain sensor properties, camera-related aspects and the needs of the intended applications in science, medicine or industry.

Optical Format and Resolution

In the visible wavelength range, the considerations for the optical setup do not differ significantly from those for conventional light microscopy in terms of format, magnification and resolution. However, overall cost increases with larger lens mounts and optical formats such as the F-mount. The most common choice is the C-mount, which offers very good optical performance and for which most products and solutions are available at a reasonable price. The smaller S-mount is a good choice for developing compact, low-cost instruments. Square sensors are often preferable for capturing the maximum image content, because the microscope's field of view is circular and a square sensor covers a larger share of it than a rectangular sensor with the same diagonal.
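The geometric advantage of square sensors can be checked with a short calculation. The sketch below assumes an idealized case in which the sensor is inscribed exactly in a circular field of view (sensor diagonal equal to the field diameter) and reports which fraction of that circle each aspect ratio captures; the function name is illustrative.

```python
import math

def inscribed_sensor_coverage(aspect_ratio: float) -> float:
    """Fraction of a circular field of view covered by a rectangular sensor
    whose diagonal equals the field diameter (aspect_ratio = width / height)."""
    a = aspect_ratio
    # For field diameter d: width = d*a/sqrt(1+a^2), height = d/sqrt(1+a^2),
    # so sensor area = d^2 * a / (1 + a^2), while circle area = pi * d^2 / 4.
    return 4.0 * a / (math.pi * (1.0 + a * a))

for name, ratio in [("1:1", 1.0), ("4:3", 4 / 3), ("16:9", 16 / 9)]:
    print(f"{name}: {inscribed_sensor_coverage(ratio):.1%} of the field")
```

Under these assumptions a square sensor captures about 63.7 % of the field, a 4:3 sensor about 61.1 % and a 16:9 sensor about 54.4 %; the expression 4a/(π(1 + a²)) peaks at a = 1.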

Since the sensor plays a key role in an imaging system, it is very important to consider particular performance specifications when making a selection.

CCD, CMOS, sCMOS and BSI

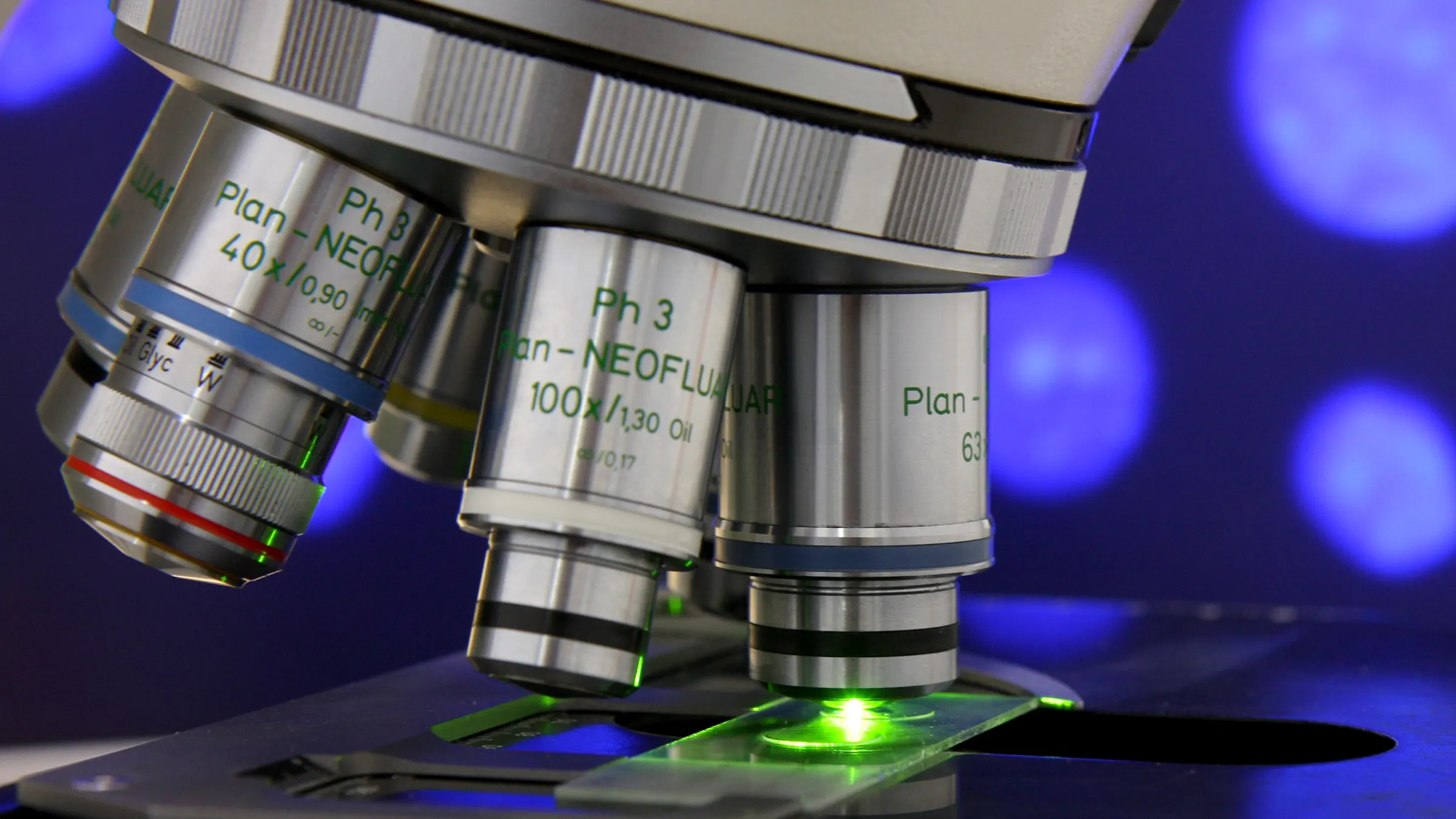

For a long time, CCD sensors were the established technology for fluorescence microscopes. This has changed, even though CCDs are still found in high-quality microscopy cameras. CMOS technology has become increasingly prevalent and is now competitive with respect to the special challenges of scientific imaging. Its noise levels are now comparable to or even better than those of traditional CCD sensors; at the same time, CMOS enables higher speeds, higher resolutions and lower power consumption and heat dissipation, all at lower prices.

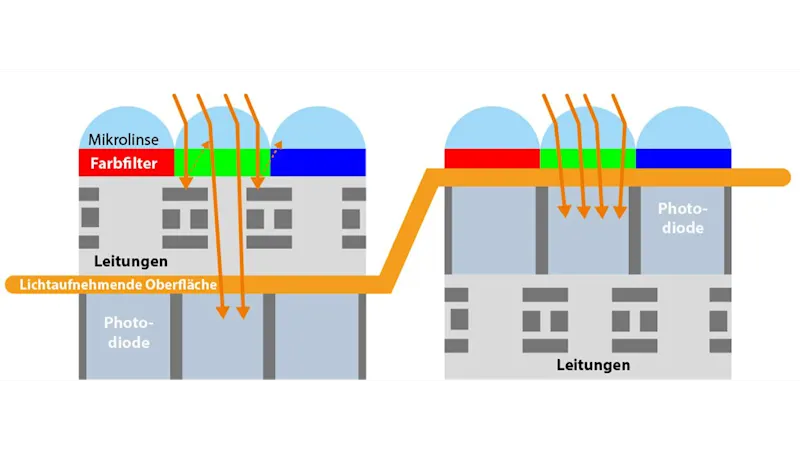

CMOS sensors are still developing rapidly. A technology called backside illumination (BSI) has found its way into industrial image sensors. BSI inverts the pixel structure so that the light-sensitive photodiode sits directly under the microlenses rather than behind the wiring layers, which significantly increases the quantum efficiency of the pixels (Figure 1).

Monochrome or Color

Monochrome cameras are generally preferred for fluorescence applications because of their higher quantum efficiency. The reason for this difference is that in color cameras, a Bayer microfilter on each pixel lets only certain wavelengths pass through. This filtering is needed to calculate the color information of the image in a process called debayering. Because the color filters block part of the light, fewer photons reach the light-sensitive area of the pixel. In addition to the Bayer pattern, the IR-cut filter in color cameras is a limiting factor because it blocks light above approximately 650 to 700 nm (Figure 2).
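To illustrate why the Bayer pattern costs signal, the sketch below builds masks for an RGGB mosaic (the RGGB layout is an assumption; sensors also use GRBG, BGGR and others) and simulates a green-emitting fluorophore. It assumes idealized filters: green light passes the green filters completely and is fully blocked by red and blue ones. Real filters transmit less than 100 % and leak somewhat, so this is only a rough upper bound on the collected signal.

```python
import numpy as np

def bayer_masks(height: int, width: int) -> dict[str, np.ndarray]:
    """Boolean masks for an RGGB Bayer mosaic (layout assumed for illustration)."""
    rows, cols = np.indices((height, width))
    return {
        "R": (rows % 2 == 0) & (cols % 2 == 0),
        "G": (rows % 2) != (cols % 2),  # two green sites per 2x2 cell
        "B": (rows % 2 == 1) & (cols % 2 == 1),
    }

# Idealized assumption: green emission reaches only pixels under green filters.
h, w = 4, 6
photons_per_pixel = 100.0
masks = bayer_masks(h, w)
color_signal = np.where(masks["G"], photons_per_pixel, 0.0)
mono_signal = np.full((h, w), photons_per_pixel)

print(color_signal.sum() / mono_signal.sum())  # 0.5
```

Even in this idealized case, the color sensor collects only half of the photons a monochrome sensor would. Debayering interpolates the missing values for display but cannot recover the blocked light.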

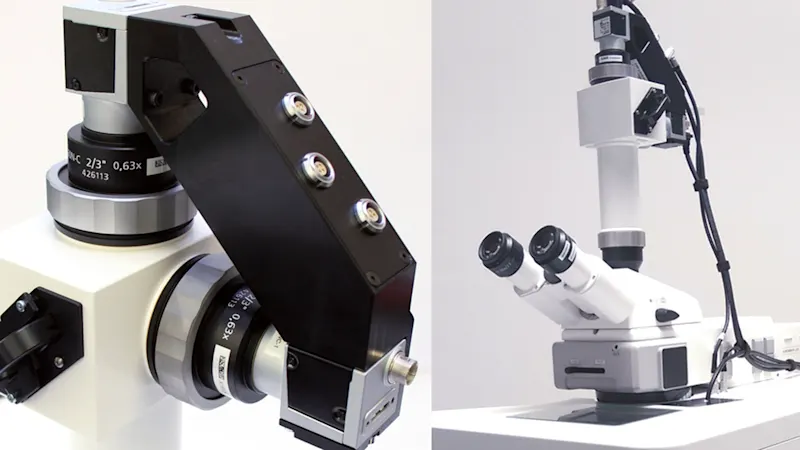

Typically, images with multiple fluorescence markers, used for the specific detection and co-localization of molecules of interest, are composed from separate images acquired with a monochrome camera. Selectable light sources and filter sets provide the right combination of excitation and emission wavelengths for each fluorophore (Figure 3).
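Combining the separate monochrome exposures into one multi-color image is typically a matter of assigning each channel a display color and adding the results. The sketch below shows one simple way to do this with NumPy; the per-channel min/max normalization and the example colors are display choices assumed for illustration, not a standard.

```python
import numpy as np

def pseudocolor_composite(channels, colors):
    """Merge monochrome fluorescence images into one RGB composite.

    channels: list of 2-D arrays (one exposure per fluorophore)
    colors:   list of (r, g, b) weights in [0, 1], one per channel
    """
    height, width = channels[0].shape
    composite = np.zeros((height, width, 3), dtype=float)
    for image, color in zip(channels, colors):
        img = image.astype(float)
        # Normalize each channel independently to [0, 1] (a display choice).
        span = img.max() - img.min()
        norm = (img - img.min()) / span if span > 0 else np.zeros_like(img)
        composite += norm[..., None] * np.asarray(color, dtype=float)
    return np.clip(composite, 0.0, 1.0)

nuclei = np.array([[0, 10], [0, 0]])    # e.g. a nuclear-stain exposure
protein = np.array([[0, 10], [10, 0]])  # e.g. a labeled-protein exposure
rgb = pseudocolor_composite([nuclei, protein], [(0, 0, 1), (0, 1, 0)])
print(rgb[0, 1])  # [0. 1. 1.] -> co-localized signal appears cyan
```

Because each fluorophore is recorded at full monochrome sensitivity, the color is added only at display time.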

However, some applications require both color imaging and fluorescence within one instrument using only one camera. This is possible if the sensitivity requirements of the fluorescence application are not too high.

Global and Rolling Shutter

CCD sensors offer only one shutter type (global), while CMOS sensors are available with either a rolling or a global shutter. Choosing the right sensor has a significant impact on image quality, especially when target objects are moving. In rolling shutter sensors, the pixels are exposed line by line. As a result, an object that moves between the exposure of two lines appears at different positions in those lines, which produces spatial distortion in the image. A technical advantage of rolling shutter sensors is that they have fewer electronic components in each pixel, which can result in lower readout noise. Global shutter sensors, by contrast, expose all pixels of the sensor at the same time. Because there is no time shift between the exposures of different pixel lines, moving objects are imaged without this spatial distortion.
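The rolling shutter distortion can be reproduced with a toy model. The sketch below renders a vertical bar moving to the right, assuming that each rolling shutter row is sampled instantaneously at `row * line_time` while a global shutter samples all rows at once. Exposure blur is ignored, and the speed and line time are hypothetical values chosen to give a shift of one pixel per row.

```python
import numpy as np

def capture_moving_bar(rows, cols, x0, speed_px_per_us, line_time_us,
                       rolling=True, bar_width=2):
    """Render a vertical bar moving left-to-right as seen by the sensor.

    Rolling shutter: row r is sampled at t = r * line_time_us.
    Global shutter:  every row is sampled at t = 0.
    """
    image = np.zeros((rows, cols), dtype=np.uint8)
    for r in range(rows):
        t = r * line_time_us if rolling else 0.0
        left = int(round(x0 + speed_px_per_us * t))
        image[r, max(left, 0):max(left + bar_width, 0)] = 1
    return image

def bar_left_edges(image):
    """Column index of the first lit pixel in each row."""
    return [int(np.argmax(row)) for row in image]

rolling = capture_moving_bar(5, 20, x0=2, speed_px_per_us=0.1, line_time_us=10)
global_ = capture_moving_bar(5, 20, x0=2, speed_px_per_us=0.1,
                             line_time_us=10, rolling=False)
print(bar_left_edges(rolling))  # [2, 3, 4, 5, 6]: the bar is skewed
print(bar_left_edges(global_))  # [2, 2, 2, 2, 2]: the bar stays vertical
```

The total skew grows with the number of rows: with a hypothetical 2,000 rows and 10 µs line time, the last row is sampled about 20 ms after the first.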

Sensitivity and Dynamic Range

Before taking a closer look at image quality, it is important to ensure that the system is sensitive enough to capture the fluorescence signals, which can be very weak depending on the application. Sensitivity should be understood as the minimum amount of light required to generate a signal that can be distinguished from noise. An important value is the quantum efficiency (QE), the ratio of electrons generated in the pixel to photons incident on it. QE depends on the wavelength, so for the best result the sensor's spectral response should match the emission spectra of the fluorophores used. The higher the QE, the better the photon yield, which enables shorter exposure times, reduces photobleaching of fluorophores and can improve overall imaging speed.
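The link between QE and exposure time is simple arithmetic: the mean signal is QE times the number of incident photons. The sketch below uses hypothetical numbers (2,000 photons per pixel per second at the emission peak, a target of 1,000 electrons) to show how a higher QE at the fluorophore's emission wavelength shortens the required exposure.

```python
def signal_electrons(photon_flux_per_s, exposure_s, qe):
    """Mean photoelectrons collected by one pixel: QE x incident photons."""
    return qe * photon_flux_per_s * exposure_s

def exposure_for_target(target_electrons, photon_flux_per_s, qe):
    """Exposure time needed to collect a target number of photoelectrons."""
    return target_electrons / (qe * photon_flux_per_s)

# Hypothetical values: 2,000 photons/s per pixel, 1,000 e- wanted per pixel.
for qe in (0.8, 0.5):
    t = exposure_for_target(1000, 2000, qe)
    print(f"QE {qe:.0%}: {t * 1000:.0f} ms")
```

This prints 625 ms for a QE of 80 % and 1000 ms for 50 %. At constant excitation intensity, the shorter exposure also means a lower light dose on the sample and therefore less photobleaching.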

It is often also beneficial to resolve a wide range of light intensities in a single exposure. Here the full well capacity is relevant: the maximum number of electrons that one pixel can collect per exposure. The higher the full well capacity, the more light can be captured before a pixel saturates, which reduces the need for additional exposures.

The dynamic range is the ratio of the maximum number of electrons to the lowest number of electrons required to produce a true signal (see “read noise” in the next section). It characterizes a camera’s overall ability to measure and distinguish different levels of light.
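A common way to state the dynamic range is as the ratio of full well capacity to read noise, often converted to decibels (20·log10) or bits (log2). The sketch below uses illustrative values that are not taken from any specific datasheet; note that some datasheets follow the EMVA 1288 definition, which uses slightly different quantities.

```python
import math

def dynamic_range(full_well_e, read_noise_e):
    """Dynamic range as full well capacity / read noise, also in dB and bits."""
    ratio = full_well_e / read_noise_e
    return ratio, 20 * math.log10(ratio), math.log2(ratio)

# Illustrative values: 10,000 e- full well capacity, 2 e- read noise.
ratio, db, bits = dynamic_range(10_000, 2.0)
print(f"{ratio:.0f}:1, {db:.1f} dB, {bits:.1f} bits")  # 5000:1, 74.0 dB, 12.3 bits
```

The bit value gives a rough idea of how many ADC bits are needed so that quantization does not discard part of the sensor's dynamic range.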

Finally, there is the absolute sensitivity threshold: the number of photons one pixel requires to generate a signal-to-noise ratio (SNR) of 1, meaning the signal equals the noise. The smaller this value, the less light is required to produce a true signal. Because it does not take the pixel size into account, it cannot be used directly to compare two cameras with different pixel sizes.
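The threshold can be derived from a simple noise model. The sketch below assumes that the only noise sources are photon shot noise and temporal dark noise, so SNR = η·μp / √(σd² + η·μp) for QE η, mean photon count μp and dark noise σd in electrons. Solving SNR = 1 gives η·μp = 1/2 + √(σd² + 1/4). Dividing by the pixel area then makes cameras with different pixel sizes comparable. The pixel pitches below are hypothetical.

```python
import math

def abs_sensitivity_threshold(qe: float, dark_noise_e: float) -> float:
    """Mean photons per pixel needed for SNR = 1 (simplified noise model).

    Assumed model: SNR = qe*mu_p / sqrt(dark_noise_e**2 + qe*mu_p).
    With x = qe*mu_p, SNR = 1 gives x**2 - x - dark_noise_e**2 = 0,
    whose positive root is x = 1/2 + sqrt(dark_noise_e**2 + 1/4).
    """
    return (0.5 + math.sqrt(dark_noise_e ** 2 + 0.25)) / qe

def threshold_per_area(qe: float, dark_noise_e: float, pixel_pitch_um: float) -> float:
    """The same threshold expressed in photons per square micrometre."""
    return abs_sensitivity_threshold(qe, dark_noise_e) / pixel_pitch_um ** 2

# Two hypothetical sensors with identical QE and dark noise, different pixel pitch.
for pitch in (2.4, 5.86):
    per_pixel = abs_sensitivity_threshold(0.7, 2.0)
    per_area = threshold_per_area(0.7, 2.0, pitch)
    print(f"{pitch} um: {per_pixel:.2f} photons/pixel, {per_area:.3f} photons/um^2")
```

Both sensors need the same number of photons per pixel, but the larger pixel collects them from a larger area and therefore needs less light per unit area, at the cost of spatial resolution.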

Image Quality and Noise

Noise is the deviation between the true signal value and the value produced by a measuring system. The SNR quantifies the overall noise of an imaging system at a given light level and is a common parameter for comparing cameras. The higher the SNR, the better the image quality. Some types of noise in the imaging process can rarely, if at all, be reduced by camera technology, for example photon (shot) noise, which arises from the statistical nature of photon arrival. Other noise types, however, are significantly affected by the sensor itself and the camera technology. In recent years, modern CMOS sensors have surpassed CCD technology in image quality and performance. Read noise, or temporal dark noise, is the noise added to the signal with each exposure and readout, and is given in e⁻/pixel. Modern CMOS sensors achieve read noise as low as 2 e⁻/pixel (Figure 4).
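How these noise sources combine can be sketched with a simplified per-pixel SNR model. The model assumes that shot noise of the photo signal and of the dark signal follows Poisson statistics (variance equals mean) and that read noise adds independently; fixed-pattern noise and quantization are ignored. The read noise values of 2 e⁻ and 6 e⁻ are hypothetical.

```python
import math

def pixel_snr(photons, qe, read_noise_e, dark_current_e_per_s=0.0, exposure_s=0.0):
    """SNR of one pixel under a simplified noise model.

    Variances of independent noise sources add:
    shot noise (= signal), dark shot noise (= dark signal), read noise squared.
    """
    signal_e = qe * photons
    dark_e = dark_current_e_per_s * exposure_s
    noise_e = math.sqrt(signal_e + dark_e + read_noise_e ** 2)
    return signal_e / noise_e

# Hypothetical comparison at QE 0.7: read noise of 2 e- vs 6 e-.
for photons in (20, 10_000):
    low, high = pixel_snr(photons, 0.7, 2.0), pixel_snr(photons, 0.7, 6.0)
    print(f"{photons} photons: SNR {low:.1f} (2 e-) vs {high:.1f} (6 e-)")
```

At 20 photons the lower read noise gives a clearly better SNR (roughly 3.3 versus 2.0), while at 10,000 photons both values approach the shot-noise limit (about 83.6 versus 83.5). Low read noise therefore matters most for weak fluorescence signals.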

Another noise source relevant to fluorescence applications becomes important as exposure times increase: dark current. Dark current is the leakage of electrons into the pixel during exposure, which occurs even without light, and is expressed in e⁻/pixel/s (Figure 5). As a rule of thumb, dark current doubles with every 7 °C increase in temperature.
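The 7 °C doubling rule and the effect of exposure time can be combined in a few lines. The sketch below assumes a hypothetical reference dark current of 1 e⁻/pixel/s at 25 °C and a 10 s exposure. It treats the accumulated dark signal as Poisson-distributed, so its noise is the square root of the dark signal.

```python
import math

def dark_current_at(temp_c, ref_dark_e_per_s, ref_temp_c, doubling_c=7.0):
    """Rule of thumb: dark current doubles every `doubling_c` degrees C."""
    return ref_dark_e_per_s * 2 ** ((temp_c - ref_temp_c) / doubling_c)

def dark_noise_e(dark_e_per_s, exposure_s):
    """Shot noise of the accumulated dark signal (Poisson: sqrt of the mean)."""
    return math.sqrt(dark_e_per_s * exposure_s)

# Hypothetical sensor: 1 e-/pixel/s at 25 degrees C, 10 s exposure.
for temp in (11, 25, 39):
    d = dark_current_at(temp, 1.0, 25)
    print(f"{temp} C: {d:.2f} e-/pixel/s, dark noise {dark_noise_e(d, 10):.2f} e-")
```

At 39 °C the dark noise in this example (about 6.3 e⁻) is already three times a read noise of 2 e⁻, whereas cooling to 11 °C brings it down to about 1.6 e⁻.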

Noise that varies spatially rather than over time is called fixed-pattern noise; it describes deviations between different pixels. It can be caused by the pixel electronics or by uneven temperatures across the sensor area.

Standardized measures of these noise types are the DSNU (dark signal nonuniformity), which describes the pixel-to-pixel deviation of the signal without any light, and the PRNU (photoresponse nonuniformity), which describes the pixel-to-pixel deviation at a given light level. By setting cutoff values for pixel-to-pixel deviations, outlying pixels can be classified as defect pixels, such as hot pixels, which show high gray values without a corresponding signal. Some camera manufacturers identify defect pixels during quality control and correct them by interpolating neighboring pixels, so integrators are not affected by these artifacts.
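The idea behind DSNU, PRNU and hot-pixel detection can be sketched from stacks of dark and uniformly illuminated frames. This is a simplified estimate loosely inspired by EMVA 1288, not its full measurement procedure. Averaging over frames suppresses temporal noise and leaves the spatial component. Results are in the data's units (e.g. DN) unless converted with the system gain. The hot-pixel cutoff (5 robust standard deviations) is an assumed choice, and the test data are synthetic.

```python
import numpy as np

def nonuniformity(dark_stack, bright_stack, hot_sigma=5.0):
    """Simplified DSNU / PRNU estimates from frame stacks (frames x H x W)."""
    dark_mean = dark_stack.mean(axis=0)      # temporal noise averaged out
    bright_mean = bright_stack.mean(axis=0)

    dsnu = dark_mean.std()                   # spatial spread of the dark signal
    photo = bright_mean - dark_mean          # light-induced signal per pixel
    prnu_percent = 100.0 * photo.std() / photo.mean()

    # Hot pixels: dark level far above the typical level (robust sigma via MAD).
    center = np.median(dark_mean)
    spread = 1.4826 * np.median(np.abs(dark_mean - center))
    hot = np.argwhere(dark_mean > center + hot_sigma * spread)
    return dsnu, prnu_percent, hot

# Synthetic data: offset + temporal noise, one injected hot pixel, 1 % gain spread.
rng = np.random.default_rng(0)
dark = rng.normal(10.0, 1.0, size=(16, 32, 32))
dark[:, 5, 7] += 200.0
gain = rng.normal(1.0, 0.01, size=(32, 32))
bright = dark + 500.0 * gain + rng.normal(0.0, 1.0, size=(16, 32, 32))

dsnu, prnu, hot = nonuniformity(dark, bright)
print(f"DSNU {dsnu:.2f} DN, PRNU {prnu:.2f} %, hot pixels {hot.tolist()}")
```

Note that the single hot pixel inflates the DSNU value here, which is one reason why defect pixels are usually handled separately from the nonuniformity of the remaining pixels.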

Interfaces

There are various interfaces on the market. To decide which interface is required, the following points should be considered for the application: data/image rate, cable length, standardization, integration effort and costs. USB 3.0 (renamed USB 3.2 Gen 1) and GigE represent the current state of the art for integration into fluorescence microscopy systems. Vision standards (USB3 Vision and GigE Vision) are available for both interfaces. These specifications, developed by leading camera manufacturers, simplify the design of vision systems, reduce integration effort and improve performance for camera integrators.

USB 3.2 Gen 1 is the conventional, established plug-and-play interface with the simplest integration. It allows data rates of about 380 MB/s, which corresponds to roughly 75 fps at 5 MP with 8-bit pixels and is sufficient for most applications. Cable lengths of up to several meters, with power supplied over the cable, are supported, as are multi-camera setups. GigE is used when longer cables and more precise synchronization of multiple cameras are required. Its bandwidth of about 100 MB/s is 3.8 times lower than that of USB 3.2 Gen 1. For both interfaces, new versions with up to four times higher bandwidth have already been released. However, these still have to establish themselves on the market, which also requires the availability of corresponding hardware peripherals.
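The frame rate figures follow from dividing the interface bandwidth by the data per frame. The sketch below assumes a 5 MP sensor of 2448 × 2048 pixels, 8-bit monochrome output and no protocol overhead, so the results are upper bounds rather than guaranteed rates.

```python
def max_frame_rate(bandwidth_bytes_per_s, width, height, bits_per_pixel=8):
    """Upper bound on frame rate when the interface is the only bottleneck."""
    bytes_per_frame = width * height * bits_per_pixel / 8
    return bandwidth_bytes_per_s / bytes_per_frame

# Assumed 5 MP sensor (2448 x 2048), 8-bit monochrome, overhead ignored.
usb = max_frame_rate(380e6, 2448, 2048)
gige = max_frame_rate(100e6, 2448, 2048)
print(f"USB 3.2 Gen 1: {usb:.0f} fps, GigE: {gige:.0f} fps")  # 76 fps, 20 fps
```

Higher bit depths scale the data per frame accordingly; for example, 12-bit pixels transmitted in a 16-bit container halve the achievable frame rate.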

Cooling

The temperature of the sensor has a central influence on the dark current, which degrades the SNR and image quality, especially when light signals are weak and longer exposure times are needed. Cooling the camera can therefore be important, but it is not always necessary in fluorescence imaging. Since cooling significantly increases system costs, most cameras are not actively cooled, which is sufficient for applications with strong fluorescence signals. Even in these cameras, however, the design influences the sensor temperature. Heat generation should be minimized by operating the camera at low power consumption. In addition, heat should be transported efficiently to the outside through the internal hardware design and by mounting the camera on a heat-dissipating carrier.

Thermoelectric (Peltier) elements are used to actively cool a sensor, and an integrated fan usually dissipates the heat generated by the Peltier element to the outside. The fan also helps prevent condensation when the sensor is cooled below ambient temperature. Where the vibrations a fan can cause must be avoided, some cameras can be water-cooled instead.

Improvements Through Firmware

Beyond the hardware and sensor specifications, cameras can offer firmware features that improve image quality in low-light conditions.

One example is defect pixel correction. During final inspection, the manufacturer operates the camera at different exposure times, locates defective pixels and stores their positions in the camera's memory. In operation, the value of each defective pixel is replaced by a weighted sum of its neighboring pixels. This improves image quality and SNR.
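The correction step can be sketched as follows, assuming a list of defect coordinates is already known (standing in for the map stored in the camera). Using equal weights over the valid 4-neighbors is an illustrative choice; actual firmware may weight neighbors differently. On color sensors, only neighbors with the same Bayer color would be used.

```python
import numpy as np

def correct_defects(image, defect_coords):
    """Replace listed defect pixels with the mean of their valid 4-neighbours.

    Neighbours outside the image or themselves marked defective are skipped.
    """
    out = image.astype(float).copy()
    defects = {tuple(c) for c in defect_coords}
    rows, cols = image.shape
    for r, c in defects:
        neighbours = [
            image[nr, nc]
            for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in defects
        ]
        if neighbours:
            out[r, c] = float(np.mean(neighbours))
    return out

frame = np.full((3, 3), 10.0)
frame[1, 1] = 255.0  # hot pixel listed in a hypothetical defect map
print(correct_defects(frame, [(1, 1)])[1, 1])  # 10.0
```

Because the defect map is fixed, this correction costs only a few operations per listed pixel and can run on every frame.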

Current CMOS sensor generations enable applications that previously required investing several thousand euros in a CCD camera. These new possibilities are becoming ever more important, since fluorescence is an increasingly used tool in the life sciences for visualizing structures and processes.

Fluorescence in the Field

Fluorescence is a physical phenomenon, not a specific technology. The possible methods, e.g. for analytics, quantitative determinations or visualizations in the life sciences, are almost countless. Fluorophores can be coupled to various carriers such as proteins (often antibodies), nucleic acids or microparticles. They can also be introduced into organisms as genetically encoded markers in order to study cell-biological functions and processes. Beyond the life sciences, fluorescence-based methods are also used in other areas, such as material analysis or forensics. The following examples show the versatility of fluorescence applications.

In the in vitro diagnosis of autoimmune or infectious diseases, indirect immunofluorescence microscopy can be used to detect specific antibodies in the patient’s blood.

In addition to manual microscopy, there are automated systems that give lab physicians suggested findings based on software evaluation of the fluorescence patterns of cells incubated with patient sera (Figure 6). Another system analyzes patient samples for malaria pathogens in less than 3 minutes, using vision-based algorithms that also take fluorescence signals into account.

Point-of-care systems are gaining significance in medical diagnostics. Among other things, their simple and inexpensive applications make it possible to improve medical care even in regions with weak economies and infrastructure. Lab-on-a-chip technologies enable patient samples to be processed on a small chip without complex lab equipment.

In surgical microscopy, surgeons are increasingly supported by specific fluorescent labeling of blood vessels or tumor tissue, which enables them to operate with greater precision in fluorescence-guided surgery. Dentists can also offer faster and more targeted treatment, for example by selectively visualizing tooth areas affected by caries during treatment. Last but not least, fluorescence microscopy is used in pathology to examine tissue from patient biopsies for possible diseases.

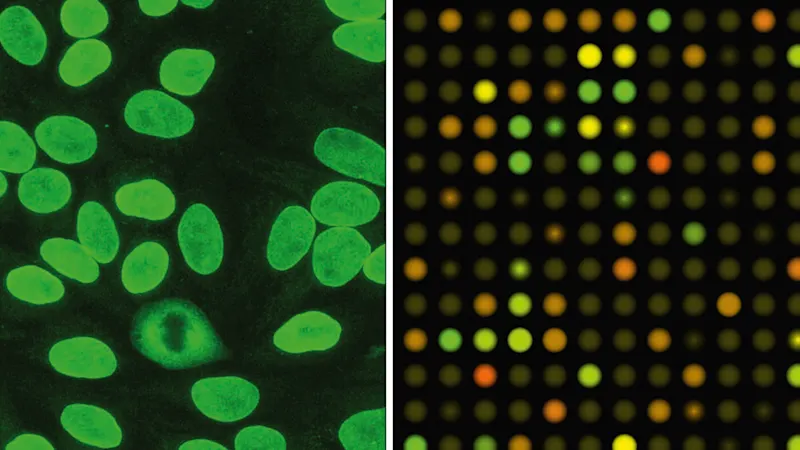

The life sciences offer a broad range of fluorescence-based applications in which microscopy plays a significant role. Immunofluorescence microscopy enables the specific detection of particular proteins, for example to determine their localization in cells and tissues or, depending on the test conditions, to serve as markers of the onset of cell death (Figure 7). Today, live cell imaging can also be performed over long periods on automated systems.

Miniaturization and parallelization to increase sample throughput are especially important in pharmaceutical research, where very large numbers of samples are screened in the search for new active substances. This is where microarrays and high-content screening systems are used (Figure 8).

With automatic colony counters, fluorescence markers in petri dishes can be used to identify colonies of successfully transfected cells and then pick a sample from the respective colony. This verifies whether particular genetic material was actually transferred into the cells during an experiment, so researchers can continue working with these cells.
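One basic approach to finding fluorescent colonies in an image is to threshold it and group bright pixels into connected regions, whose centroids can then serve as pick positions. The sketch below is a minimal illustration of that approach using a breadth-first flood fill with 4-connectivity. It is not how any particular colony counter works, and the threshold and minimum area are assumed, application-specific parameters.

```python
from collections import deque

import numpy as np

def count_fluorescent_colonies(image, threshold, min_area=1):
    """Find connected bright regions (4-connectivity) above a threshold.

    Returns one (centroid_row, centroid_col, area) tuple per colony.
    """
    mask = image > threshold
    seen = np.zeros_like(mask, dtype=bool)
    rows, cols = mask.shape
    colonies = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0, c0] or seen[r0, c0]:
                continue
            # Breadth-first flood fill of one connected region.
            queue = deque([(r0, c0)])
            seen[r0, c0] = True
            pixels = []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                    if 0 <= nr < rows and 0 <= nc < cols and mask[nr, nc] and not seen[nr, nc]:
                        seen[nr, nc] = True
                        queue.append((nr, nc))
            if len(pixels) >= min_area:
                rr, cc = zip(*pixels)
                colonies.append((sum(rr) / len(rr), sum(cc) / len(cc), len(pixels)))
    return colonies

plate = np.zeros((6, 8))
plate[1:3, 1:3] = 50.0  # fluorescent colony A
plate[4, 5:8] = 40.0    # fluorescent colony B
plate[0, 7] = 5.0       # dim background below the threshold
colonies = count_fluorescent_colonies(plate, threshold=20.0)
print(len(colonies))  # 2
```

In practice, background correction and a minimum area help to reject noise and debris before colonies are selected for picking.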