Deep Learning Vision Systems for Industrial Image Processing

System structure and components

Deep learning vision systems are often already a central component of industrial image processing. They enable precise defect detection, intelligent quality control, and automated decisions – wherever conventional image processing methods reach their limits. We show how a functional deep learning vision system is structured and which components are required for reliable operation.

Last updated: 12/02/2025

The system structure of deep learning vision systems

Deep learning vision systems are designed from the ground up for neural networks. They rely on GPU-based computing power, optimized frameworks, and end-to-end learning approaches. This makes them flexible, but often also resource-intensive.

The goal: end-to-end AI integration from image acquisition to decision-making

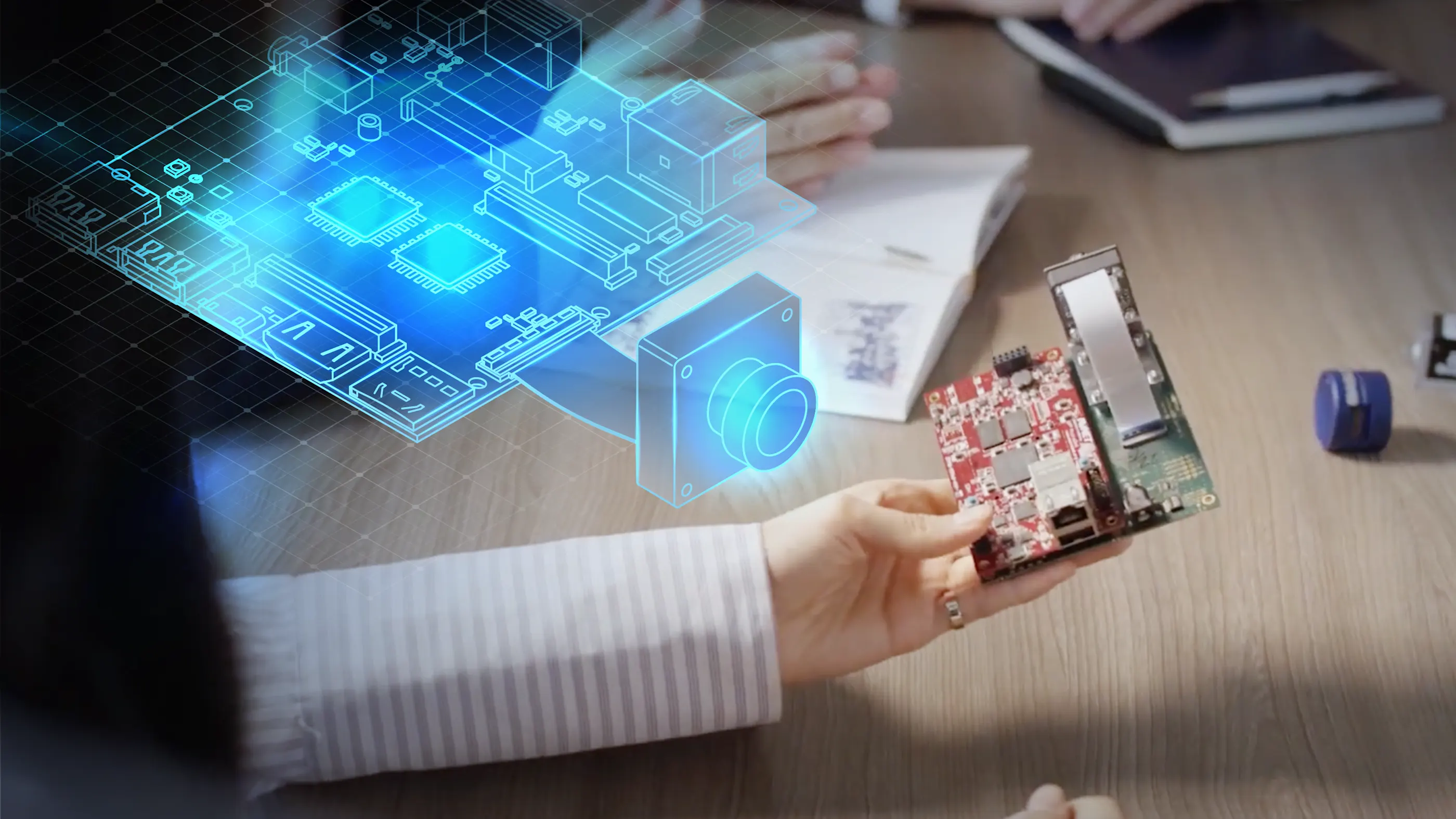

The main goal of a deep learning vision system is the seamless integration of artificial intelligence across all process steps. From capturing raw data with the camera to the real-time processing of image data to automated decision-making with the AI model, all components are optimized for deep learning. This creates a closed system that delivers precise, reproducible, and scalable results for demanding industrial applications.

Deep learning vision pipeline: from image acquisition to AI-supported decision-making

Proper interaction of the system components is crucial for the performance of a deep learning vision system. The typical workflow in a deep learning vision system takes place in these successive process steps:

1. Image acquisition: The machine vision camera captures the raw image and delivers high-quality image data.

2. Image transmission: A frame grabber forwards the image data efficiently and loss-free to the processing hardware.

3. Pre-processing: The pylon software or internal camera functions optimize the image (e.g. noise reduction or debayering). The deep learning software handles the control and configuration of the pipeline and the AI-based analysis of the data.

4. AI inference: A CNN model analyzes the image and makes a decision (e.g. defect detection).

5. Result transmission: The results are forwarded to the controller or the higher-level system.
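To make the five steps above concrete, here is a minimal sketch that chains them as plain Python functions. Everything in it is hypothetical: `acquire`, `preprocess`, `infer`, and `run_pipeline` are illustrative names, the "camera" returns a tiny synthetic frame, and a simple brightness rule stands in for the CNN. It does not use the pylon or pylon AI APIs; it only shows how the output of each stage feeds the next.

```python
from dataclasses import dataclass
from typing import Callable, List

# A grayscale image as rows of 8-bit pixel values (illustrative only).
Image = List[List[int]]


@dataclass
class InspectionResult:
    label: str
    score: float


def acquire() -> Image:
    # Stand-in for steps 1-2 (camera capture and transfer): a tiny synthetic frame
    # with one unusually bright pixel in the middle.
    return [[10, 12, 11], [13, 250, 12], [11, 10, 12]]


def preprocess(img: Image) -> List[List[float]]:
    # Step 3: scale pixel values to [0, 1], a common input normalization.
    return [[p / 255.0 for p in row] for row in img]


def infer(x: List[List[float]]) -> InspectionResult:
    # Step 4: placeholder for a CNN. A hypothetical bright-pixel rule replaces the model.
    score = max(max(row) for row in x)
    return InspectionResult("defect" if score > 0.9 else "ok", score)


def run_pipeline(send: Callable[[InspectionResult], None]) -> InspectionResult:
    result = infer(preprocess(acquire()))
    send(result)  # Step 5: forward the result to the controller / higher-level system
    return result


sent: List[InspectionResult] = []
result = run_pipeline(sent.append)
print(result.label)  # defect
```

Passing the "send" step in as a callback keeps the sketch independent of any particular transport; in a real system it would be replaced by the interface to the PLC or higher-level system.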

Interfaces and integration solutions ensure smooth communication between the modules and enable integration into existing production environments. This process ensures fast, reliable, and reproducible image analysis in industrial applications.

The hardware and software components of a deep learning vision system

A deep learning vision system consists of several technically coordinated components. Each component performs a specific task within the overall system and contributes to its performance and reliability.

Deep learning vision hardware

The image processing hardware is the computational core of the deep learning vision system. The choice of hardware depends on the requirements in terms of processing speed, system costs, and scalability. Different platforms are used depending on the application:

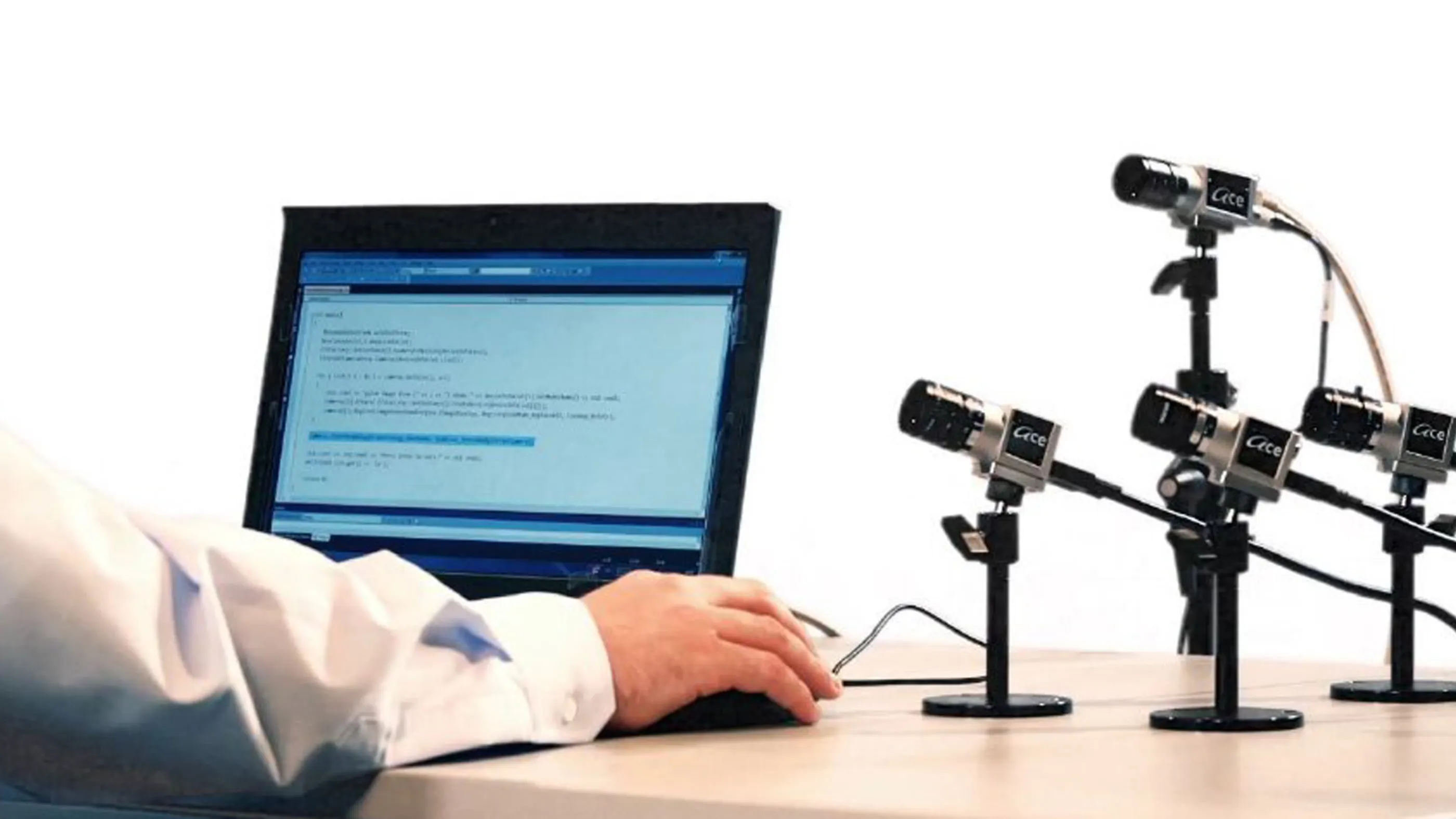

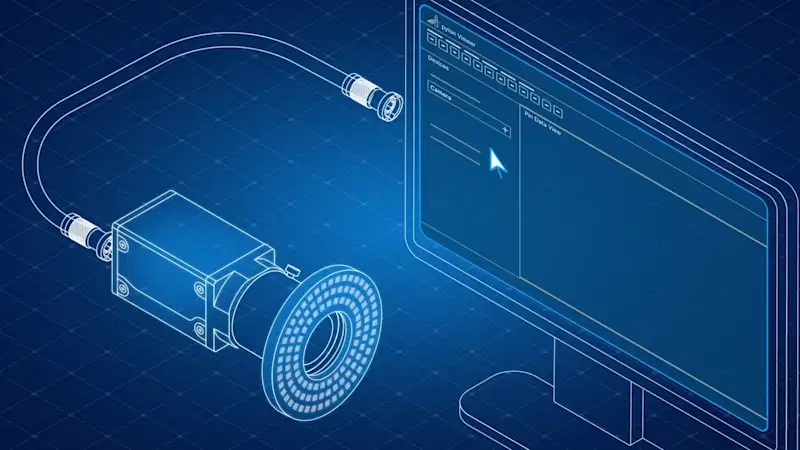

Machine vision camera

The machine vision camera is the heart of the system. It captures the image data that is later processed by the AI model. High image quality is crucial for precise inference results. Industrial cameras such as the Basler ace, Basler ace 2, Basler dart or Basler racer series offer:

High resolution and image quality

Support for common interfaces such as GigE, USB 3.0, and CoaXPress

Internal image pre-processing (e.g. debayering, sharpening, noise reduction)

Reproducible results for reliable deep learning applications
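Debayering, listed above as one of the internal pre-processing functions, converts the raw color-filter mosaic of a color sensor into RGB values. The sketch below shows the simplest possible variant, assuming an RGGB layout: each 2×2 cell becomes one RGB pixel (halving the resolution) and the two green samples are averaged. Cameras typically use interpolating methods that keep full resolution; this is only meant to show what the operation does, and `debayer_rggb_superpixel` is a hypothetical name.

```python
from typing import List, Tuple


def debayer_rggb_superpixel(raw: List[List[int]]) -> List[List[Tuple[int, int, int]]]:
    """Convert an RGGB Bayer mosaic to RGB by collapsing each 2x2 cell into one pixel.

    Assumes even width and height and an R G / G B layout in every 2x2 cell.
    """
    h, w = len(raw), len(raw[0])
    if h % 2 or w % 2:
        raise ValueError("width and height must be even")
    rgb = []
    for y in range(0, h, 2):
        row = []
        for x in range(0, w, 2):
            r = raw[y][x]
            g = (raw[y][x + 1] + raw[y + 1][x]) // 2  # average the two green samples
            b = raw[y + 1][x + 1]
            row.append((r, g, b))
        rgb.append(row)
    return rgb


mosaic = [
    [200, 100, 10, 20],
    [120, 50, 30, 40],
]
print(debayer_rggb_superpixel(mosaic))  # [[(200, 110, 50), (10, 25, 40)]]
```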

Frame grabber and image data management

A frame grabber is indispensable for applications with high data throughput or real-time requirements. Frame grabbers capture the image data directly from the camera and forward it to the system for further processing. Especially in combination with on-board FPGAs, they enable low-latency, robust, high-speed image acquisition and processing.
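Whether an application counts as "high data throughput" can be estimated with simple arithmetic: the raw data rate is width × height × bytes per pixel × frames per second. The sketch below uses a hypothetical 2448 × 2048 monochrome 8-bit sensor at 75 fps and compares the result with the nominal 1 Gbit/s line rate of Gigabit Ethernet. The figures are illustrative, not the specification of any particular camera, and usable bandwidth is lower than the nominal rate because of protocol overhead.

```python
def data_rate_mb_per_s(width: int, height: int, bytes_per_pixel: float, fps: float) -> float:
    """Raw (uncompressed) image data rate in megabytes per second (1 MB = 10**6 bytes)."""
    return width * height * bytes_per_pixel * fps / 1e6


# Nominal Gigabit Ethernet line rate: 1 Gbit/s = 125 MB/s, before protocol overhead.
GIGE_NOMINAL_MB_PER_S = 1e9 / 8 / 1e6

# Hypothetical example: 5 MP monochrome sensor, 8 bit per pixel, 75 fps.
rate = data_rate_mb_per_s(2448, 2048, 1, 75)
print(f"{rate:.1f} MB/s, exceeds GigE: {rate > GIGE_NOMINAL_MB_PER_S}")  # 376.0 MB/s, exceeds GigE: True
```

At roughly three times the nominal GigE rate, a setup like this would call for a higher-bandwidth interface such as CoaXPress together with a frame grabber.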

Deep learning software and tools

The software forms the link between the hardware and the AI model. It enables the integration, configuration, and control of the cameras as well as the training and execution of deep learning models.

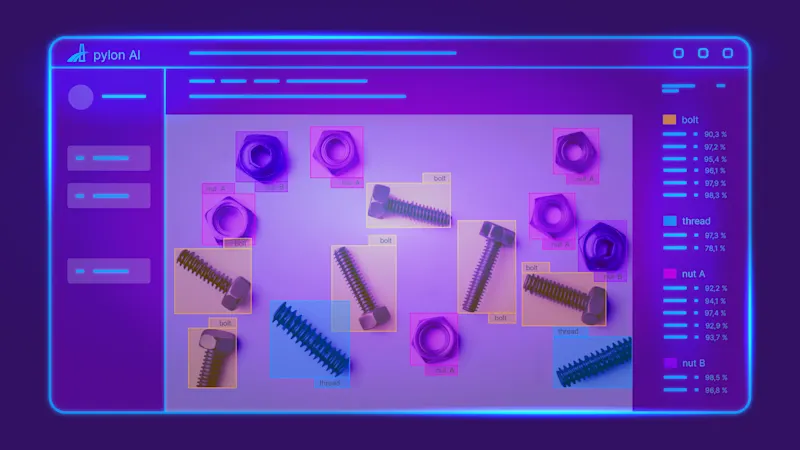

pylon AI

pylon AI is a powerful platform that was specially developed for the efficient integration and execution of Convolutional Neural Networks (CNNs) in industrial image processing workflows. pylon AI enables the simple integration, optimization, and benchmarking of your own AI models directly on the target hardware.

pylon vTools for Image Processing

Combined with pylon AI, the pylon vTools offer ready-to-use, application-specific image processing functions such as object recognition, OCR, segmentation, and classification, without requiring in-depth programming knowledge. vTools are available based on both classic algorithms and artificial intelligence.

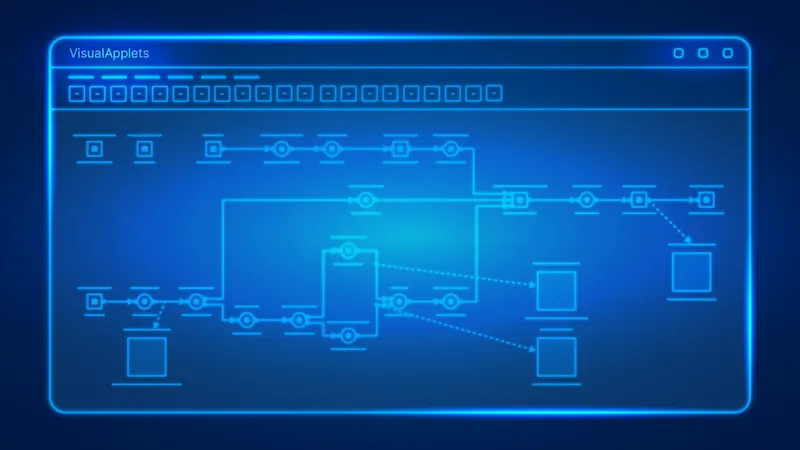

VisualApplets for FPGA programming

For FPGA-based systems, VisualApplets offers an intuitive, graphical development environment where image pre-processing steps can be flexibly implemented at the hardware level. This combination ensures maximum flexibility, scalability, and precision throughout the deep learning vision system. FPGA-based pre-processing allows, for example, large amounts of data to be reduced and prepared in a targeted manner before the actual inference by selecting only the relevant image areas (region of interest, ROI) for further processing.

Benefits: reduced computing load, higher inference throughput with the same hardware, and increased accuracy by focusing on the essentials.
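The effect of ROI selection on the data volume can be illustrated in a few lines. In the system described here this step would run on the FPGA (programmed with VisualApplets); the Python below is only a hypothetical sketch of the operation and of the resulting data reduction, with `crop_roi` and `data_fraction` as illustrative names and a 512 × 512 ROI in a 2448 × 2048 frame as an assumed example.

```python
from typing import List


def crop_roi(img: List[List[int]], x: int, y: int, w: int, h: int) -> List[List[int]]:
    """Return the w x h region whose top-left corner is at column x, row y."""
    if x < 0 or y < 0 or x + w > len(img[0]) or y + h > len(img):
        raise ValueError("ROI lies outside the image")
    return [row[x:x + w] for row in img[y:y + h]]


def data_fraction(img_w: int, img_h: int, roi_w: int, roi_h: int) -> float:
    """Share of the full-frame pixels that remain after cropping to the ROI."""
    return (roi_w * roi_h) / (img_w * img_h)


# Hypothetical example: only a 512 x 512 region of a 2448 x 2048 frame is inspected.
print(f"{data_fraction(2448, 2048, 512, 512):.1%} of the original data")  # 5.2% of the original data
```

Since the inference cost of a CNN grows with the number of input pixels, passing only about 5% of the frame to the model is where the reduced computing load and higher throughput come from.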

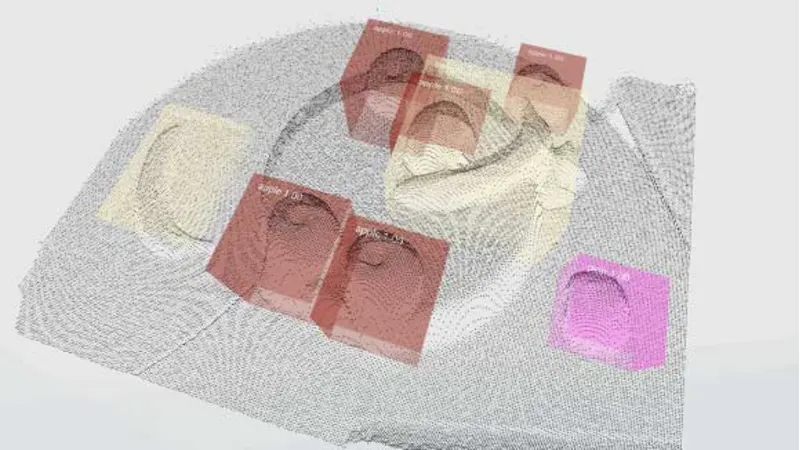

Inference through the AI model

During the inference phase, a CNN (Convolutional Neural Network) usually takes over the analysis of the incoming image data. The model processes the images captured by the machine vision camera in several successive layers to extract relevant features such as shapes, edges, or textures. This is followed by classification, segmentation, or object recognition - depending on the task at hand.
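How a convolutional layer extracts features such as edges can be shown with a single filter. The sketch below slides a 3 × 3 kernel over a small image (as in CNN frameworks, this is technically a cross-correlation with no padding) and applies a ReLU activation. The kernel here is the hand-picked Sobel-x vertical-edge filter chosen for illustration; in a trained CNN the kernel values are learned, and many such filters are stacked over several layers.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(img: Matrix, kernel: Matrix) -> Matrix:
    """2D cross-correlation without padding, as computed by CNN 'convolution' layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    out = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            s = 0.0
            for i in range(kh):
                for j in range(kw):
                    s += img[y + i][x + j] * kernel[i][j]
            row.append(s)
        out.append(row)
    return out


def relu(m: Matrix) -> Matrix:
    # Typical CNN activation: keep positive responses, set negative ones to zero.
    return [[max(0.0, v) for v in row] for row in m]


# Hand-picked vertical-edge kernel (Sobel-x); a trained CNN would learn its kernels.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]

# Dark left half, bright right half: a vertical edge between columns 2 and 3.
img = [[0, 0, 0, 1, 1, 1] for _ in range(3)]
features = relu(conv2d_valid(img, SOBEL_X))
print(features)  # [[0.0, 4.0, 4.0, 0.0]]
```

The feature map responds only where the kernel window straddles the edge, which is the kind of local pattern the first CNN layers pick up before deeper layers combine them into shapes and textures.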

With pylon AI and the pylon vTools, this process is automated and occurs in real time: The image data is directly transferred to the AI model, which then identifies faulty components, reads text on products (OCR), or localizes specific objects in the image, for example.

The results of the inference are immediately available for downstream processes such as sorting, quality control, or process optimization. Seamless integration into the deep learning vision system ensures fast, precise, and reproducible decision-making.
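Before a result can drive sorting, the model output usually has to be mapped to a discrete action. The following is a hypothetical sketch of one common design: a defect score in [0, 1] is compared with two thresholds, and scores between them are routed to a manual check instead of being sorted automatically. The action names and the threshold values 0.8 and 0.3 are assumptions for illustration; in practice they would be tuned on validation data for the specific application.

```python
from enum import Enum


class Action(Enum):
    PASS = "pass"
    REJECT = "reject"
    MANUAL_CHECK = "manual_check"


def decide(defect_score: float, reject_above: float = 0.8, pass_below: float = 0.3) -> Action:
    """Map a model's defect score in [0, 1] to a sorting action (thresholds are hypothetical)."""
    if not 0.0 <= defect_score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if defect_score >= reject_above:
        return Action.REJECT
    if defect_score < pass_below:
        return Action.PASS
    return Action.MANUAL_CHECK  # uncertain cases are not sorted automatically


for score in (0.95, 0.5, 0.1):
    print(score, decide(score).value)
```

The middle band trades a small amount of manual effort for fewer wrong automatic decisions; setting both thresholds to the same value turns it into a plain pass/reject split.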

The quality of the model depends largely on the quality of the training data and the optimization for the hardware used. The highest possible image quality is therefore not only important in the image acquisition process step - it forms the basis for training the AI. The higher the quality of this image data during training, the more precise and reliable the results of the AI analyses and the decisions derived from them will be.

Models that have already been pre-trained can be easily integrated and further developed with pylon AI.

System integration and interfaces

Decisive for the performance of deep learning vision systems

The successful implementation of deep learning vision systems in industrial image processing depends to a large extent on well thought-out system integration and selecting the right interfaces. Efficient communication between the AI model and hardware, as well as smooth integration into the production process, are of central importance here.

Seamless hardware-software communication

The pylon software provides certified drivers and powerful interfaces that ensure direct and reliable communication between the AI inference and the camera hardware. These include standards such as GigE Vision for flexible network solutions, USB3 Vision for uncomplicated connectivity and CoaXPress for applications with the highest bandwidth and real-time requirements. These standardized interfaces minimize the integration effort and ensure stable data transmission.

pylon AI offers a powerful solution by enabling the integration of Convolutional Neural Networks (CNNs) directly into the established pylon image processing pipeline. This ensures robust and efficient data processing.

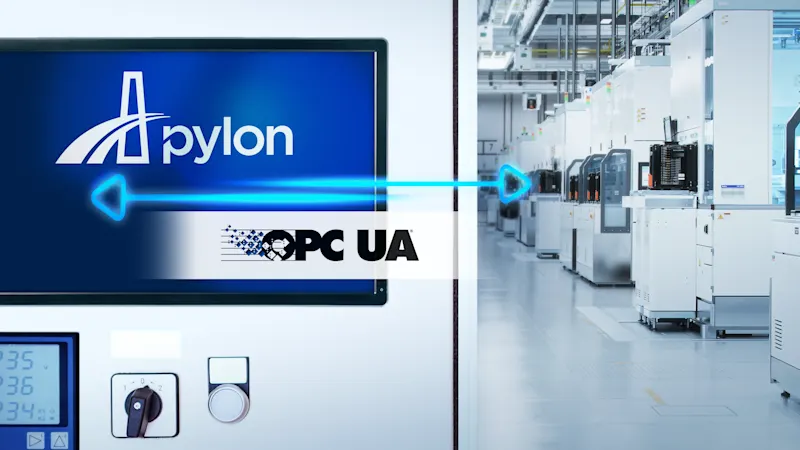

Industrial connectivity

Support for OPC UA is essential for connecting to higher-level control systems. It enables the direct transfer of AI results to PLC or MES systems. As a platform- and manufacturer-independent standard, OPC UA ensures simple and standardized data exchange between machines. With the OPC UA vTool, you can publish results from the image processing pipeline directly to an OPC UA server for seamless data exchange.

A Recipe Code Generator can also facilitate the rapid adaptation of AI models to changing product variants and thus increase flexibility in production. Detailed information on the Recipe Code Generator in the pylon Viewer can be found in the Basler Product Documentation.
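The idea behind recipes is that everything that changes between product variants is bundled and selected by a single key. The sketch below is a hypothetical illustration of that concept only; it is not the code or file format produced by the Recipe Code Generator. The variant names, model paths, exposure times, and thresholds are all invented for the example.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Recipe:
    model_path: str         # hypothetical path to the AI model for this variant
    exposure_us: float      # camera exposure time in microseconds
    reject_threshold: float  # decision threshold applied to the model output


# Hypothetical recipes keyed by product variant.
RECIPES: Dict[str, Recipe] = {
    "housing_black": Recipe("models/housing_black", 800.0, 0.80),
    "housing_white": Recipe("models/housing_white", 250.0, 0.75),
}


def select_recipe(variant: str) -> Recipe:
    """Look up the settings for a product variant, failing loudly for unknown variants."""
    try:
        return RECIPES[variant]
    except KeyError:
        raise ValueError(f"no recipe defined for variant {variant!r}") from None


print(select_recipe("housing_white"))
```

Failing on an unknown variant, rather than falling back to a default, avoids silently inspecting a new product with settings that were never validated for it.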

Flexible architectures: edge computing and cloud integration

The requirements for deep learning vision systems vary greatly depending on the application. This makes flexible architectures essential:

Edge computing for decentralized applications

For latency-critical, mobile, or decentralized applications, embedded vision technology offers the ability to run AI models directly "at the edge". Platforms such as NVIDIA® Jetson™ run AI models directly on the device, ensuring maximum autonomy, minimal latency, and reduced dependency on network connections.
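Whether a setup is fast enough for a given line speed can be checked against a per-frame time budget: at a frame rate f, each frame may take at most 1000 / f milliseconds if it must be finished before the next one arrives. The sketch below sums hypothetical stage timings for an edge device and reports the remaining headroom. The timings are invented for illustration and would have to be measured on the actual hardware; with cloud inference, the network round trip would be added as a further stage, which is one reason latency-critical tasks run at the edge.

```python
from typing import Dict


def frame_budget_ms(fps: float) -> float:
    """Time available per frame if each frame must be processed before the next arrives."""
    return 1000.0 / fps


def check_budget(fps: float, stage_ms: Dict[str, float]) -> float:
    """Return the remaining headroom in ms (negative means the pipeline cannot keep up)."""
    return frame_budget_ms(fps) - sum(stage_ms.values())


# Hypothetical stage timings on an edge device; real values must be measured.
edge_stages = {"transfer": 2.0, "preprocess": 1.5, "inference": 6.0}
headroom = check_budget(50, edge_stages)  # 20 ms budget at 50 fps
print(f"{headroom:.1f} ms headroom")  # 10.5 ms headroom
```

This check assumes the stages run strictly one after another. If they are pipelined so that consecutive frames overlap, throughput is limited by the slowest stage instead, while the sum still determines the end-to-end latency of each frame.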

Cloud integration for scalability

For applications that require large amounts of data, distributed training, or centralized management of many systems, we support integration with leading cloud platforms such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform. This provides the necessary scalability and flexibility for complex deep learning workflows.

This standardized and flexible system integration ensures fast, reliable, and reproducible analysis of image data. It enables the integration of deep learning vision systems into distributed production environments so that AI-supported analyses and decisions are made directly where the image data is generated. This is crucial for efficient quality control, defect detection, and process optimization in complex, multi-site production networks.

Straightforward installation and reliable system integration are essential for long-term success and help to master complex tasks efficiently.

A functional deep learning vision system generally consists of a high-quality machine vision camera, a powerful frame grabber, suitable image processing hardware, specialized deep learning software, and an optimized AI model. Reliable, high-performance interfaces ensure a smooth system integration process. With our products and services, we offer vision engineers and anyone involved in AI solutions for their application a solid basis for sophisticated industrial image processing projects - from prototype development to series production.