Integrated Focus Stacking Solution Delivering Extended Depth of Field for AOI Systems

Focus stacking extends the depth of field by capturing multiple images at different focal positions and combining their sharpest regions into a single image. When combined with liquid lens autofocus, focus stacking algorithms enable fast, high-magnification inspection of microstructures with varying surface heights, delivering a fully focused 5.1 MP image in just 67 ms. However, developing and implementing such solutions involves significant technical challenges.

Focus stacking: an important technique to extend depth of field

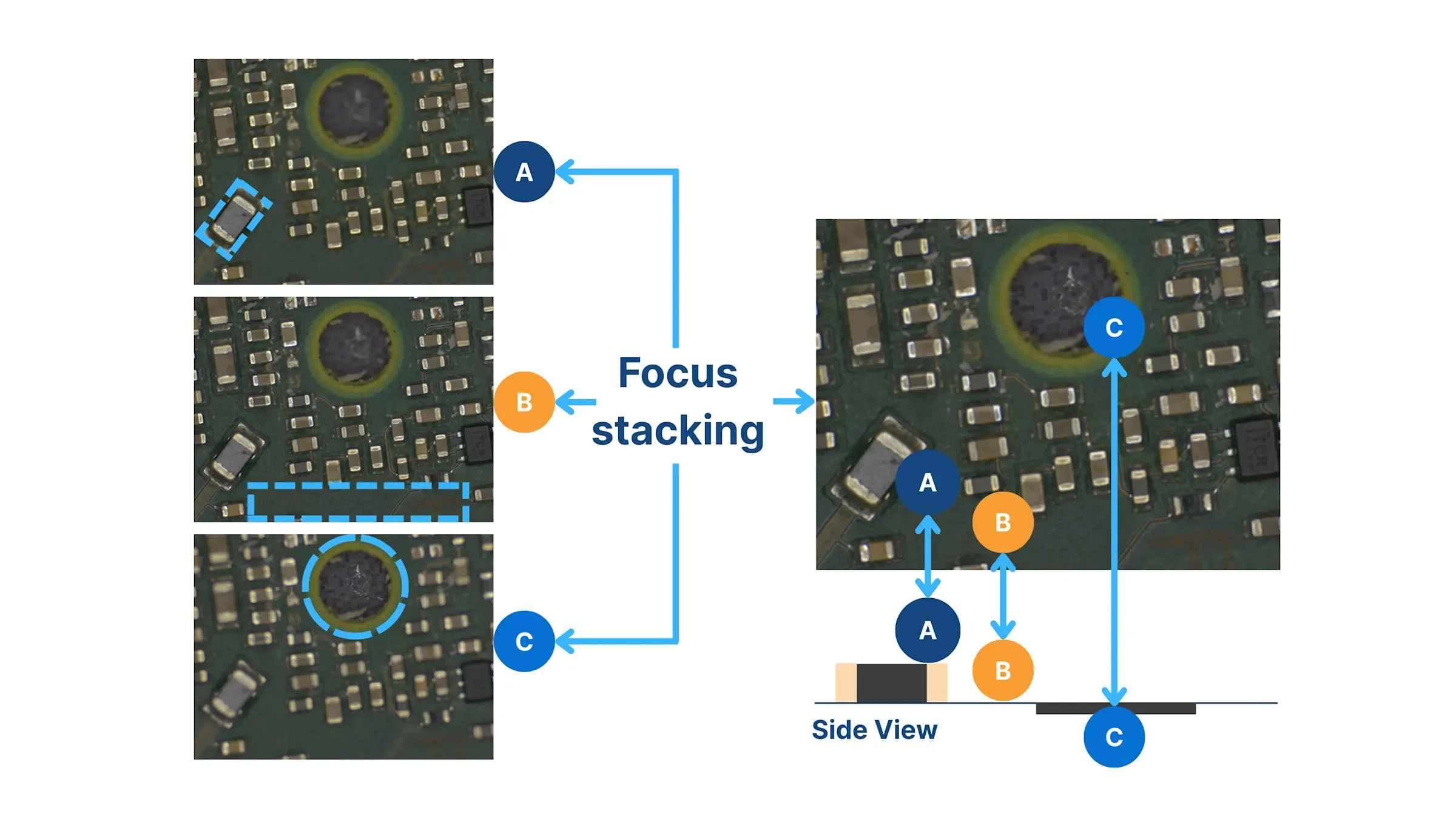

Focus stacking—also known as depth composition or focus composition—captures multiple images at different focal positions and merges their sharpest regions into a single all-in-focus image. It is a valuable technique in high-magnification machine vision, particularly in AOI systems for semiconductor and electronics inspection.

These systems are often required to image components with significant height variations (PCB parts, solder joints, traces, and IC structures, for example) that can span from 5 µm to several hundred micrometers within a single field of view. These 3D features are typically the most critical to inspect, yet also the most difficult to capture clearly in a single frame. Focus stacking addresses this challenge by generating extended-depth images that enable reliable, real-time inspection.
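How many frames a stack needs follows from the height range and the depth of field of the optics. The sketch below is a simple planning aid under stated assumptions: each frame is taken to be sharp over a band equal to the depth of field, focal planes are evenly spaced, and an optional overlap fraction keeps adjacent sharp bands from merely touching. The function names and the 20 µm depth of field in the example are hypothetical; real depth of field depends on the objective's numerical aperture, the wavelength, and the acceptable blur.

```python
import math


def slices_needed(height_range_um: float, dof_um: float, overlap: float = 0.0) -> int:
    """Number of focal positions whose depth-of-field bands cover a height range.

    Assumes each frame is sharp over a band of width dof_um centred on its focal
    plane, and that adjacent planes are spaced dof_um * (1 - overlap) apart.
    """
    if dof_um <= 0 or not 0.0 <= overlap < 1.0:
        raise ValueError("dof_um must be > 0 and 0 <= overlap < 1")
    if height_range_um <= dof_um:
        return 1  # a single frame already covers the whole range
    step = dof_um * (1.0 - overlap)
    # The first band covers dof_um; each extra frame adds one step of coverage.
    return math.ceil((height_range_um - dof_um) / step) + 1


def focal_positions(z_min_um: float, height_range_um: float,
                    dof_um: float, overlap: float = 0.0) -> list[float]:
    """Evenly spaced focal planes (in µm), the first half a band above z_min_um."""
    n = slices_needed(height_range_um, dof_um, overlap)
    step = dof_um * (1.0 - overlap)
    first = z_min_um + dof_um / 2.0
    return [first + i * step for i in range(n)]


if __name__ == "__main__":
    # Hypothetical optics: 20 µm depth of field, 200 µm of height variation.
    print(slices_needed(200, 20))        # 10 frames with no overlap
    print(slices_needed(200, 20, 0.25))  # 13 frames with 25 % overlap
    print(focal_positions(0, 200, 20))
```

With these assumed numbers the result lands inside the 5 to 20 frame range typical for a stack; adding overlap trades a few extra frames for margin against focus drift.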

Making focus stacking work in AOI: an integrated solution

While focus stacking offers a compelling way to extend depth of field without sacrificing resolution, turning it into a production-ready AOI solution presents significant engineering challenges. Beyond image quality, inspection speed becomes a key concern, especially for in-line systems that demand fast cycle times. For vision engineers, every stage, from hardware synchronization and image capture to algorithm optimization and real-time processing, must be carefully designed and validated to meet both precision and throughput requirements.

Faster than a mechanical Z-stage: speed and simplicity with liquid lens

Compared to traditional Z-stage mechanisms, liquid lens autofocus technology offers clear benefits in speed, robustness, and integration. Focus adjustments happen within milliseconds, with no moving mechanical parts—making the system more compact and less prone to wear. This makes liquid lens–based focus stacking especially suitable for high-throughput AOI systems that demand speed, reliability, and space efficiency on the production line.

Liquid lens control without additional I/O boards or programming

To integrate a liquid lens into an AOI system, manufacturers typically need dedicated I/O hardware as well as custom software for lens control and camera synchronization. Additionally, limited programming language support from liquid lens manufacturers can create compatibility issues with existing development environments.

Basler eliminates these complexities by embedding liquid lens control algorithms directly in camera firmware. This approach removes the need for external I/O hardware, additional cabling, and custom software development. System integrators can control the lens through Basler's pylon Viewer interface using simple parameter adjustments or slider controls.

Focus stacking: a pixel-level, compute-intensive process

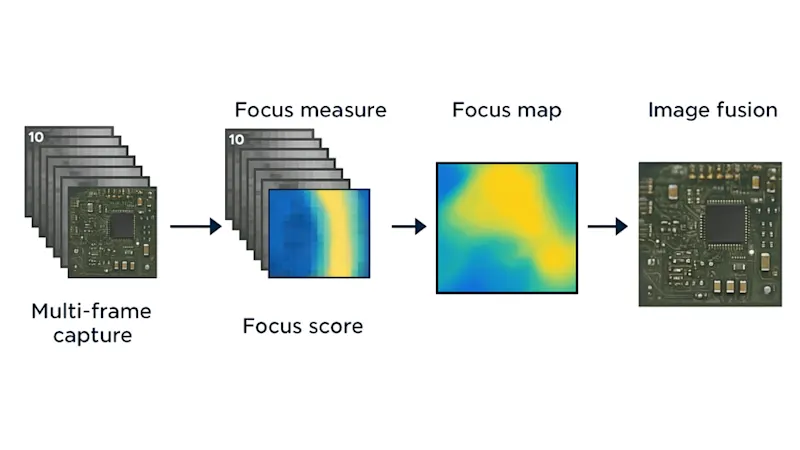

The focus stacking pipeline includes several compute-intensive steps:

Multi-frame image capture: Acquire 5–20 images at different focus depths.

Focus measure: Score the sharpness of every pixel in each layer using an operator such as the Laplacian or gradient variance.

Focus map generation: Build a map recording the sharpest layer at each pixel.

Image composition: Merge the sharpest regions while smoothing the transitions between them.
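The four steps above can be sketched in plain Python to make the data flow concrete. This is an illustrative sketch, not Basler's FPGA implementation: it assumes grayscale frames already captured (step 1), uses the absolute 4-neighbour Laplacian summed over a 3x3 window as the focus measure, takes the per-pixel argmax as the focus map, and smooths the map with a 3x3 median filter before copying pixels from the winning layers. All function names are hypothetical, and production systems often blend layers across boundaries instead of switching hard between them.

```python
from statistics import median

Image = list[list[float]]  # grayscale image as rows of pixel values


def _px(img, y, x):
    """Read a pixel, clamping coordinates so borders reuse their nearest pixel."""
    h, w = len(img), len(img[0])
    return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]


def focus_measure(img: Image) -> Image:
    """Sharpness score: |4-neighbour Laplacian| summed over a 3x3 window."""
    h, w = len(img), len(img[0])
    lap = [[abs(4 * img[y][x] - _px(img, y - 1, x) - _px(img, y + 1, x)
                - _px(img, y, x - 1) - _px(img, y, x + 1))
            for x in range(w)] for y in range(h)]
    return [[sum(_px(lap, y + dy, x + dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1))
             for x in range(w)] for y in range(h)]


def focus_map(stack: list[Image]) -> list[list[int]]:
    """For every pixel, the index of the layer with the highest focus score."""
    scores = [focus_measure(img) for img in stack]
    h, w = len(stack[0]), len(stack[0][0])
    return [[max(range(len(stack)), key=lambda k: scores[k][y][x]) for x in range(w)]
            for y in range(h)]


def smooth_map(idx: list[list[int]]) -> list[list[int]]:
    """3x3 median filter on layer indices to suppress isolated wrong picks."""
    h, w = len(idx), len(idx[0])
    return [[int(median(_px(idx, y + dy, x + dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)))
             for x in range(w)] for y in range(h)]


def compose(stack: list[Image], idx: list[list[int]]) -> Image:
    """Copy each output pixel from the layer selected in the focus map."""
    return [[stack[idx[y][x]][y][x] for x in range(len(idx[0]))] for y in range(len(idx))]


def focus_stack(stack: list[Image]) -> tuple[Image, list[list[int]]]:
    """Full pipeline: focus measure -> focus map -> smoothing -> composition."""
    idx = smooth_map(focus_map(stack))
    return compose(stack, idx), idx
```

For example, given two frames of a checkerboard where the left half is sharp in the first frame and the right half in the second, `focus_stack([a, b])` returns the full checkerboard and a focus map of 0 on the left and 1 on the right. Every output pixel touches every layer several times, which is why this naive version would be far too slow at 5.1 MP and 10 layers on a general-purpose CPU.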

Real-time stacking: 67 ms per stack, minimal CPU load

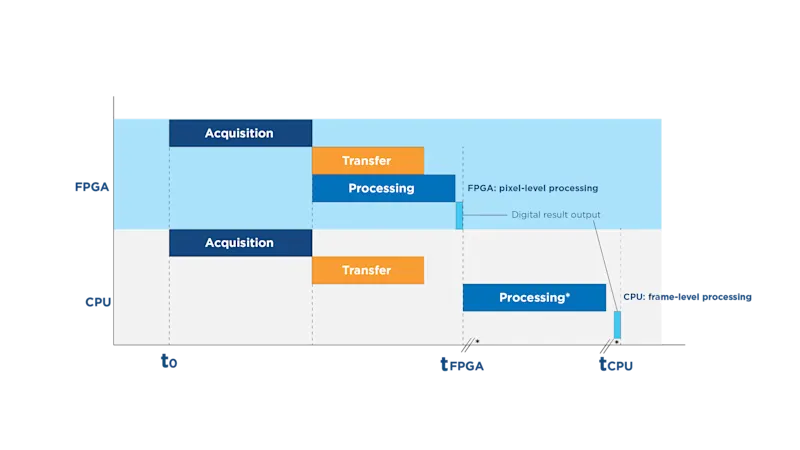

Optimizing focus stacking algorithms for reliable performance on a PC can take weeks or months, and even then, pixel-level processing of 10 or more images consumes significant CPU resources and time.

With Basler’s FPGA implementation, a fully focused image is generated from 10 captures (5.1 MP each, acquired at 212 fps) in just 67 ms, meeting real-time AOI production requirements.
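As a rough consistency check using only the figures above, and assuming the 10 frames are captured back-to-back at the full 212 fps, acquisition alone takes about 47 ms of the 67 ms, and any processing that keeps pace with the camera must sustain its raw pixel rate:

$$
t_{\text{acq}} = \frac{N}{f} = \frac{10}{212\ \text{s}^{-1}} \approx 47.2\ \text{ms},
\qquad
R = 5.1\ \text{MP} \times 212\ \text{s}^{-1} \approx 1.08\ \text{Gpixel/s}
$$

How the remaining roughly 20 ms divides between lens settling, readout, and final composition is not stated here.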

In contrast, running a similar setup on a CPU takes over one second per fused image, far too slow for in-line use.
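Taking the FPGA figure of 67 ms per fused image and the CPU figure of more than one second at face value, the speed-up is at least

$$
\frac{1000\ \text{ms}}{67\ \text{ms}} \approx 14.9
$$

which corresponds to roughly 15 fused images per second on the FPGA versus fewer than one on the CPU.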

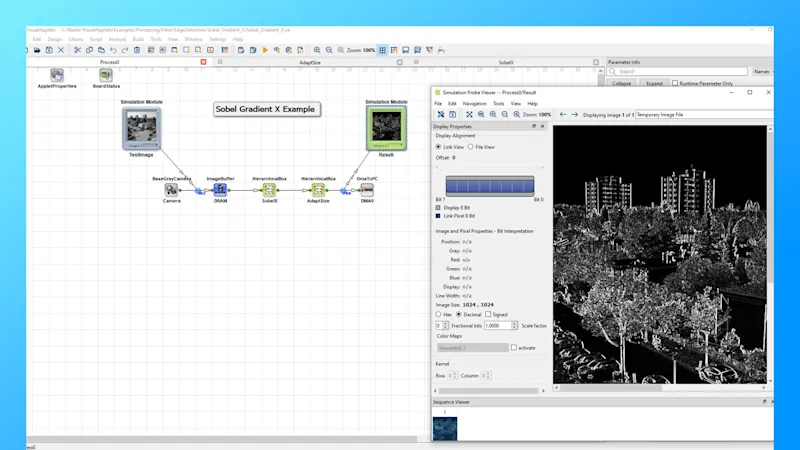

Product highlight: FPGA as a co-processor with VisualApplets (VA) for accelerated development

Basler’s solution leverages an FPGA as a co-processor on a programmable frame grabber, delivering real-time, pixel-level image pre-processing while keeping host CPU overhead to a minimum.
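One reason an FPGA suits pixel-level pre-processing is that it can work on the image as it streams in, instead of waiting for a complete frame in memory. The sketch below mimics that streaming style in Python for a 3x3 Laplacian: pixels arrive in raster (readout) order, only two completed lines are kept in line buffers, and each result is emitted as soon as its neighbourhood is complete. It is a generic illustration of line buffering under these assumptions, not the implementation used in Basler's frame grabbers or VisualApplets; the function name is hypothetical.

```python
from typing import Iterable, Iterator


def streaming_laplacian(pixels: Iterable[float], width: int) -> Iterator[float]:
    """Yield |4-neighbour Laplacian| for interior pixels of a raster-order stream.

    Only two completed lines plus the line currently arriving are stored,
    mimicking the line buffers of a streaming FPGA pipeline (no frame buffer).
    """
    prev2: list[float] = []  # line buffer holding row y-2
    prev1: list[float] = []  # line buffer holding row y-1
    cur: list[float] = []    # row y, filled pixel by pixel
    for p in pixels:
        cur.append(p)
        x = len(cur) - 1
        # When pixel (y, x) arrives and two full lines are buffered, the
        # 3x3 neighbourhood of centre pixel (y-1, x-1) is complete.
        if prev2 and x >= 2:
            c = x - 1
            yield abs(4 * prev1[c] - prev2[c] - cur[c] - prev1[c - 1] - prev1[c + 1])
        if len(cur) == width:
            # End of line: rotate the buffers instead of keeping the frame.
            prev2, prev1, cur = prev1, cur, []


if __name__ == "__main__":
    frame = [[0, 0, 0, 0], [0, 10, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
    raster = (p for row in frame for p in row)  # pixels in readout order
    print(list(streaming_laplacian(raster, width=4)))  # [40, 10, 10, 0]
```

Memory stays at about three image lines regardless of image height, and latency is one line plus one pixel; in hardware, the per-pixel arithmetic is replicated in parallel logic rather than executed as instructions.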

VisualApplets, our graphical FPGA programming tool, lets AOI system makers quickly prototype and deploy focus stacking algorithms without writing any HDL code.

This setup allows:

Real-time pixel-level processing performance

Minimal CPU overhead

Seamless integration with Basler cameras and liquid lens control

Shorter development cycles through modular, reusable IP blocks

Focus stacking algorithms rely on passive Z-axis focusing, which determines sharpness from the image content itself rather than from a separate distance sensor; this makes them flexible and cost-effective. In one project with tight precision requirements, we delivered a working concept in just one week using VisualApplets. For higher accuracy, the algorithm can be scaled up with more advanced processing.

Accelerating AOI system development with integrated solutions

AOI system developers often face tight timelines while managing diverse hardware and complex integrations. Basler’s focus stacking vision solution module is designed to ease that burden, streamlining the entire imaging workflow for AOI system integration.

By integrating lens control and focus stacking algorithms directly into the FPGAs of the vision hardware, our solution reduces development effort, speeds up time-to-market, and ensures reliable in-line performance.

Key benefits:

Reduced CPU overhead, since even compute-intensive tasks such as focus stacking run entirely on dedicated FPGA hardware.

Streamlined integration with less I/O wiring, no external control modules, and no need for extensive imaging algorithm development.

Faster time to market, thanks to accelerated development cycles and fewer system-level bottlenecks.