Vision Interfaces for Robotics

Integrating machine vision into robot applications

Modern interfaces enable communication between vision systems and robot controls. 3D vision is particularly significant in industrial robotics. Interfaces such as REST API, ROS, OPC UA, gRPC, and generic interfaces with vendor-specific plugins in robot control software ensure stable data transfer between 3D vision systems and robots, enabling precise control.

Last updated: 15/10/2025

Applications and advantages of 3D vision in robotics

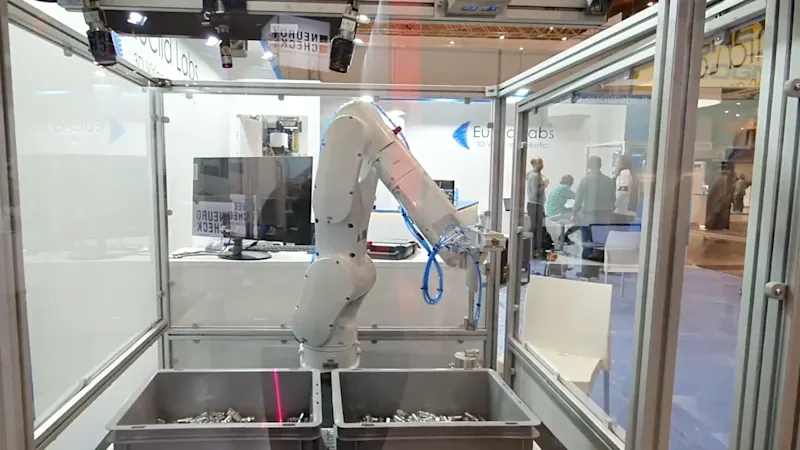

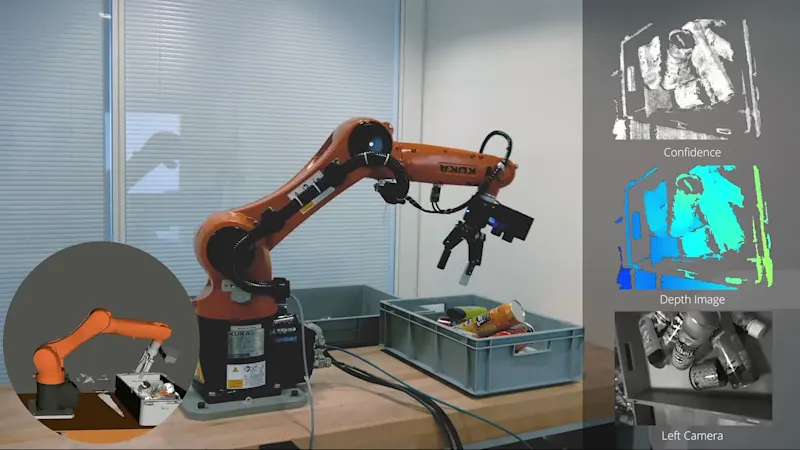

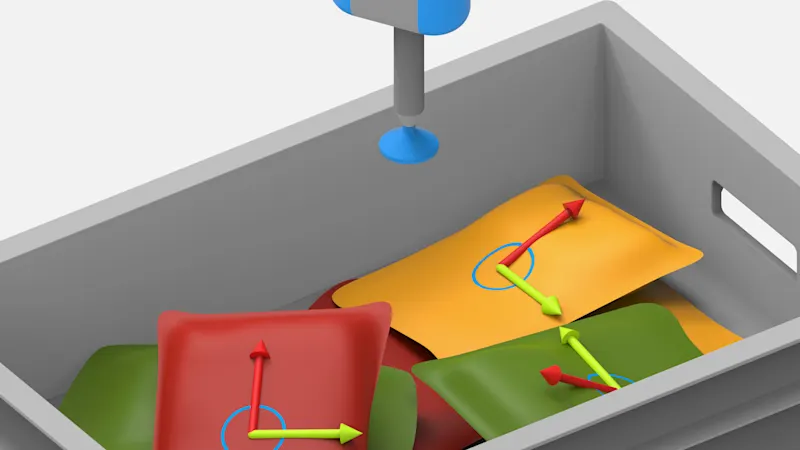

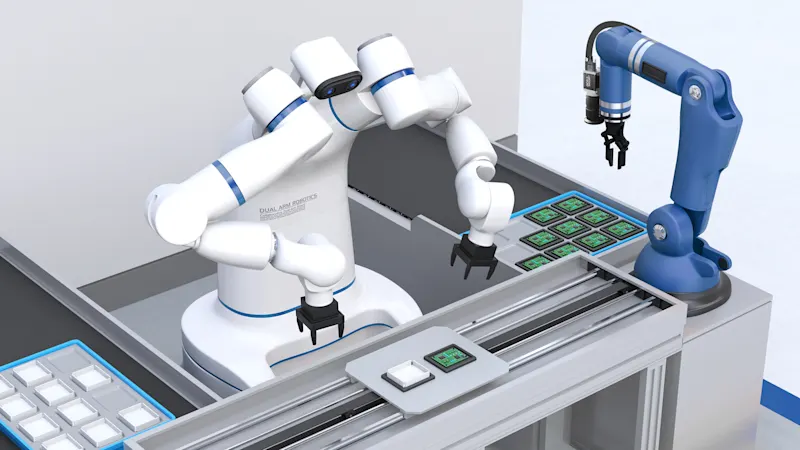

Machine vision systems perform key tasks in robotics that go far beyond pure image capture. They enable the precise recognition of objects, determine position and orientation, and provide reliable quality control. By using 3D vision technologies, pick-and-place applications, inspection tasks, and flexible automation solutions can be implemented efficiently.

3D image processing offers robots decisive advantages over classic 2D solutions. Thanks to the captured depth information, robots can recognize not only where an object lies in the image plane, but also its distance and its orientation in space. This enables precise gripping, even in complex or overlapping scenarios. 3D vision supports reliable navigation in space, safe avoidance of obstacles, and flexible adaptation to changing environments. As a result, 3D vision systems significantly increase the process reliability, efficiency, and versatility of modern robotics applications.
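To make the role of depth concrete, the following sketch back-projects a pixel with a measured depth into a 3D point using the standard pinhole camera model. The intrinsic values and the names `Intrinsics` and `back_project` are illustrative assumptions and are not taken from any specific camera; in practice, a 3D camera usually delivers calibrated depth or point cloud data directly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera intrinsics in pixels (illustrative values only)."""
    fx: float  # focal length along the image x axis
    fy: float  # focal length along the image y axis
    cx: float  # principal point, x coordinate
    cy: float  # principal point, y coordinate


def back_project(u: float, v: float, depth_m: float, k: Intrinsics) -> tuple[float, float, float]:
    """Convert a pixel (u, v) with measured depth into a 3D point in the camera frame."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


if __name__ == "__main__":
    k = Intrinsics(fx=1000.0, fy=1000.0, cx=640.0, cy=480.0)
    # The same pixel at two different depths maps to two different 3D points.
    # A pure 2D system sees only (u, v) and cannot tell these cases apart.
    print(back_project(740.0, 480.0, 0.5, k))
    print(back_project(740.0, 480.0, 1.0, k))
```

The point is still expressed in the camera frame. Before a robot can use it, it has to be transformed into the robot's coordinate system using a calibration between camera and robot.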

Interfaces for integrating vision systems

Selecting the right interface is crucial for successfully integrating machine vision into robotics applications. In addition to standardized interfaces, a range of manufacturer-specific plugins and bridges are available. Machine vision systems and robot controllers can also work together efficiently via direct communication channels.

This chapter provides an overview of the most important interfaces, technologies, and protocols that have proven themselves in practice, and of what to pay particular attention to when selecting and implementing them.

Standardized interfaces

REST API

The REST API (Representational State Transfer Application Programming Interface) is a widely used interface for integrating machine vision systems into robot applications. It enables standardized, platform-independent data exchange between the image processing component and the robot controller. Image data, position information, or status messages, for example, can be retrieved and commands can be sent to the vision system via HTTP requests.

A major advantage of the REST API is its flexibility: it can be easily integrated into existing IT infrastructures and is compatible with numerous programming languages. This means that both PCs and programmable logic controllers (PLCs) can be used as communication partners. Integration usually takes place via existing libraries and tools, which reduces the development effort.

REST APIs are particularly suitable for applications that require reliable and structured communication between the vision system and the robot. Typical application areas include transmitting pick-and-place coordinates, retrieving quality data, or controlling image acquisition processes. The use of open standards ensures future-proofing and makes it easier to connect to different robotics platforms.
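As a rough illustration of this request and response pattern, the sketch below builds an HTTP request for pick poses and parses a JSON reply using only the Python standard library. The endpoint path `/api/hypothetical/compute_grasps`, the JSON field names, and the `PickPose` type are assumptions made for this example; the actual routes and schemas depend on the vision system and are defined in its API documentation.

```python
import json
import urllib.request
from dataclasses import dataclass


@dataclass(frozen=True)
class PickPose:
    """Position in meters plus orientation as a quaternion (hypothetical schema)."""
    x: float
    y: float
    z: float
    qx: float
    qy: float
    qz: float
    qw: float


def build_request(host: str, path: str, payload: dict) -> urllib.request.Request:
    """Create a JSON POST request; nothing is sent until urlopen() is called."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=f"http://{host}{path}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_grasps(body: bytes) -> list[PickPose]:
    """Turn the (assumed) JSON reply into typed pick poses."""
    doc = json.loads(body.decode("utf-8"))
    if doc.get("status") != "ok":
        raise RuntimeError(f"vision system reported: {doc.get('status')!r}")
    poses = []
    for grasp in doc.get("grasps", []):
        p, q = grasp["position"], grasp["orientation"]
        poses.append(PickPose(p["x"], p["y"], p["z"], q["x"], q["y"], q["z"], q["w"]))
    return poses


def request_grasps(host: str, timeout_s: float = 2.0) -> list[PickPose]:
    """Send the request to a vision system and return the parsed poses."""
    # Hypothetical endpoint and payload; replace with the documented ones.
    req = build_request(host, "/api/hypothetical/compute_grasps", {"max_results": 1})
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return parse_grasps(resp.read())
```

Keeping request construction and response parsing in separate functions makes it possible to test the data handling without a camera on the network.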

ROS and ROS 2

ROS (Robot Operating System) and ROS 2 are open middleware platforms developed specifically for the development and integration of robotics applications. They offer standardized communication interfaces that enable a seamless connection between machine vision systems and robot controllers. With ROS and ROS 2, sensor data, image information, and control commands can be exchanged efficiently and complex automation tasks can be implemented flexibly.

Thanks to broad support in the robotics community and the availability of numerous open source packages, vision components can be quickly integrated and individual solutions realized. ROS and ROS 2 offer a high degree of flexibility and scalability, particularly in research and development environments and in prototyping.

OPC UA

OPC UA (Open Platform Communications Unified Architecture) is a manufacturer-independent communication standard for industrial automation. It enables the secure and reliable exchange of data between machine vision systems, robots, and other automation components. OPC UA supports complex data structures and method calls and offers high interoperability, allowing machine vision solutions to be flexibly integrated into existing production environments.

Typical application areas include transmitting process data, status information, and control commands in real time, particularly in networked Industry 4.0 applications. The Basler Stereo visard 3D camera offers an OPC UA interface for 3D vision solutions. The OPC UA server can be activated via a license update.

gRPC

gRPC is an open, cross-platform Remote Procedure Call (RPC) framework maintained by the Cloud Native Computing Foundation. gRPC differs from other standardized interfaces, such as REST API or OPC UA, by using the efficient HTTP/2 protocol and binary data transmission with protocol buffers. As a result, gRPC enables particularly fast and resource-saving communication - ideal for applications with high performance requirements and large amounts of data.

Advantages are bidirectional streaming and automatic code generation for different programming languages. Disadvantages result from its lower prevalence in the industrial environment and more complex troubleshooting compared to text-based interfaces.
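The difference between binary and text-based transmission can be illustrated with a small comparison. The sketch below does not use gRPC or Protocol Buffers; as a stand-in, it packs a pose into a fixed binary layout with Python's `struct` module and compares the size with a JSON encoding of the same values. The field names and the six-value pose layout are assumptions for this example.

```python
import json
import struct

FIELDS = ("x", "y", "z", "roll", "pitch", "yaw")
POSE_FORMAT = "<6d"  # six little-endian 64-bit floats, no padding


def encode_binary(pose: tuple) -> bytes:
    """Pack the pose into a fixed 48-byte binary layout."""
    return struct.pack(POSE_FORMAT, *pose)


def decode_binary(data: bytes) -> tuple:
    """Unpack the fixed binary layout back into six floats."""
    return struct.unpack(POSE_FORMAT, data)


def encode_text(pose: tuple) -> bytes:
    """Encode the same pose as a JSON object with named fields."""
    return json.dumps(dict(zip(FIELDS, pose))).encode("utf-8")


if __name__ == "__main__":
    pose = (0.123456789, -0.987654321, 0.456789123, 3.14159, -1.5708, 0.7854)
    print("binary bytes:", len(encode_binary(pose)))
    print("JSON bytes:  ", len(encode_text(pose)))
```

The binary form is smaller and needs no number parsing, but it cannot be read in a network trace without knowing the layout, which mirrors the debugging trade-off described above.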

GenICam

GenICam is a standard for the uniform control and configuration of industrial cameras, not for direct communication between the vision system and robot controller. It is used throughout the industry in image processing and facilitates the integration of different camera models into vision systems.

As a key industry standard in industrial image processing, GenICam enables direct access to image and depth data. Both GenICam and gRPC are interfaces that are primarily intended for transferring this image data. They are therefore particularly suitable for streaming between the camera and the evaluation system.

In contrast, interfaces such as REST API, OPC UA, or ROS are mainly used to communicate analysis results and control data to the robot controller. They usually carry only the pose results computed by the software modules, not the raw image data.

Comparing the most important standardized interfaces

The table provides an overview of the most important properties and application areas of the respective standard interfaces for integrating machine vision into robotics applications in industrial environments.

| Interface | Protocol / Technology | Typical Use Cases | Advantages | Disadvantages | Prevalence in Industry |
|---|---|---|---|---|---|
| REST API | HTTP, JSON/XML | General system integration, web applications, robotics | Platform-independent, widely used, easy to implement | No real-time capability, text-based, higher latency | High |
| ROS | TCP/IP, custom protocols | Research, prototyping, flexible robotic solutions | Large community, many open-source packages, flexible | Limited real-time capability, less suited for production | Medium |
| ROS 2 | DDS, UDP, TCP/IP | Industry 4.0, modern robotics, real-time applications | Improved real-time capability, scalable, industry-ready | More complex setup, still developing | Increasing |
| OPC UA | TCP/IP, binary, XML | Industrial automation, process communication | Vendor-independent, secure, supports complex data | Higher integration effort, complex specification | High |
| gRPC | HTTP/2, Protocol Buffers | High-performance, distributed systems, microservices, robotics | Highly performant, bidirectional streaming, automatic code generation | Less common in industry, more complex debugging | Low to increasing |
| GenICam | GenICam standard, XML, various protocols (e.g., GigE Vision, USB3 Vision) | Industrial imaging, camera control, integration into vision systems | Brand-independent camera control, high flexibility, facilitates integration of various cameras | No direct data exchange with robot control, focus on camera configuration and control | Very high in machine vision |

Manufacturer-specific plugins and bridges

URCap for Universal Robots

URCap is a plugin framework specifically developed by Universal Robots to extend the functionality of their robot controllers. It enables the integration of additional functions and peripheral devices, but is designed exclusively for the robot platforms of Universal Robots and does not follow any open, manufacturer-independent standard.

URCap enables plug-and-play installation and easy use of vision systems with Universal Robots. The interface establishes a direct connection between the vision system and the robot controller. Configuration is carried out conveniently via a PolyScope command extension or a web interface. The prerequisite for using URCap is PolyScope version 3.12.0 or higher for CB-Series robots or version 5.6.0 or higher for e-Series robots.

URCap | PolyScope-Plugin for Universal Robots

EKIBridge for KUKA

The EKIBridge is an interface based on the KUKA.Ethernet KRL Framework and enables communication between KUKA robot controllers and external systems such as machine vision solutions. Data and commands can be reliably exchanged via Ethernet using the EKIBridge.

For example, service calls, status queries, or the transmission of position data can be implemented directly from the robot program. EKIBridge supports the flexible and application-oriented integration of image processing systems in KUKA robotics applications.

EKIBridge | Software Add-on for KUKA

GRI - Generic interface for any robot

The Generic Robot Interface (GRI) is a future-proof and versatile interface that is compatible with all robots that support TCP communication. Example code is available for common robot brands such as ABB, Fanuc, and Techman; further examples for Franka Robotics, fruitcore robotics, and Yaskawa will be available shortly or are already available upon request.

Compared to the complex HTTP requests of the REST API, GRI relies on lean TCP socket communication. Requests are configured as simple job IDs via the web GUI, and responses contain pick positions in a fixed, static format. The implementation effort remains minimal, as only short code snippets are required on the robot controller.

GRI runs directly on the Basler Stereo visard, so no additional hardware or user-side application is required.
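The general idea of a lean socket exchange with a job ID and a fixed response layout can be sketched as follows. This is not the actual GRI wire format, which is defined in the vendor documentation; the message layouts `REQUEST` and `RESPONSE`, the status code, and the simulated vision side are all assumptions made for this example. A local socket pair stands in for the TCP connection so that the sketch runs without any hardware.

```python
import socket
import struct
import threading

# Hypothetical fixed layouts (little-endian, no padding), NOT the real GRI format.
REQUEST = struct.Struct("<H")     # job ID
RESPONSE = struct.Struct("<HB6d")  # job ID, status (0 = ok), x, y, z, roll, pitch, yaw


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since a stream socket may deliver partial chunks."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf.extend(chunk)
    return bytes(buf)


def request_pick(sock: socket.socket, job_id: int) -> tuple:
    """Robot side: send a job ID and read back one fixed-size pose message."""
    sock.sendall(REQUEST.pack(job_id))
    reply_id, status, *pose = RESPONSE.unpack(recv_exact(sock, RESPONSE.size))
    if reply_id != job_id:
        raise RuntimeError("reply does not match the requested job")
    if status != 0:
        raise RuntimeError(f"vision system returned status {status}")
    return tuple(pose)


def fake_vision_side(sock: socket.socket) -> None:
    """Simulated vision system: answers one request with a constant pose."""
    (job_id,) = REQUEST.unpack(recv_exact(sock, REQUEST.size))
    sock.sendall(RESPONSE.pack(job_id, 0, 0.40, -0.12, 0.05, 0.0, 3.14, 0.0))


def demo() -> tuple:
    """Run one request/response cycle over a local socket pair."""
    robot, camera = socket.socketpair()
    with robot, camera:
        worker = threading.Thread(target=fake_vision_side, args=(camera,))
        worker.start()
        pose = request_pick(robot, job_id=3)
        worker.join()
    return pose


if __name__ == "__main__":
    print(demo())
```

Because every message has a known size, the robot side needs no parser beyond a fixed unpack step, which is why such protocols are easy to implement in robot languages with limited string handling.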

GRI | Generic Robot Interface

Is your robot not listed? We will find a way to support you!

Contact us

Comparing the most important manufacturer-specific interfaces

The table provides an overview of the most important properties and application areas of the respective robotic interfaces for integrating machine vision into robotic applications in industrial environments.

| Interface | Protocol / Technology | Typical Use Cases | Advantages | Disadvantages | Prevalence in Industry |
|---|---|---|---|---|---|
| URCap | Proprietary, PolyScope, TCP/IP | Universal Robots, plug-and-play integration of peripherals | Easy installation, direct connection, intuitive configuration, good documentation | Only for Universal Robots, dependent on PolyScope version | High with Universal Robots |
| EKIBridge | KUKA.Ethernet KRL, TCP/IP | KUKA robots, integration of external systems (e.g., vision) | Flexible data transfer, deep integration, versatile | Only for KUKA, license required, programming skills needed | High with KUKA |
| GRI | TCP Socket, proprietary | Robots with TCP support, e.g., ABB, Fanuc, Techman, Yaskawa | Compatible with many brands, minimal scripting effort, no additional hardware, fast implementation | Static response format, less flexible than APIs, limited customizability | Increasing |

Other robot manufacturers and their interfaces

| Manufacturer | Interface/Plugin | Vision Integration | PC Communication | Real-Time Capable | Proprietary |
|---|---|---|---|---|---|
| ABB | PC Interface / RAPID Socket | ✅ | ✅ | ❌ | ✅ |
| Fanuc | Karel / Socket Messaging | ✅ | ✅ | ❌ | ✅ |
| Yaskawa | MotoPlus / MotoCOM | ✅ | ✅ | ❌ | ✅ |
| Stäubli | VAL3 TCP/IP Interface | ✅ | ✅ | ❌ | ✅ |
| Omron Techman | TMflow Plug-ins | ✅ | ✅ | ❌ | ✅ |
| Denso | B-CAP / ORiN | ✅ | ✅ | ❌ | ✅ |
| KUKA | RSI | ✅ | ✅ | ✅ | ✅ |
| Beckhoff | TwinCAT Vision Integration | ✅ | ✅ | ✅ | ✅ |

Direct communication between vision system and robot controller

With this type of integration, data such as pick positions, quality features, or status information can be transmitted without detours to the robot controller and processed directly in the robot program.

By extending the command set or adapting parameters, tasks such as dynamically transferring gripping points, controlling test sequences, or flexibly adapting to changing production conditions can be implemented efficiently. Direct integration ensures short reaction times and high process reliability. It also opens up new possibilities for intelligent automation in robotics.

The command set of the robot controller should be expanded to include specific commands that enable seamless interaction with the vision system. These include, among others:

Requesting and receiving pick positions:

The robot can request specific coordinates or gripping points from the vision system and use them for pick-and-place tasks.

Transmission of quality or inspection results:

The vision system sends inspection results or status information directly to the robot controller to trigger follow-up actions, such as ejecting faulty parts.

Starting and stopping image processing sequences:

The robot can trigger or stop image acquisition or evaluation processes in a targeted manner, depending on the current production step.

Synchronization and trigger functions:

Commands for synchronizing robot movement and image acquisition, for example for inline inspection or gripping in motion.
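The command types listed above can be sketched as a small vocabulary together with a simulated vision system that answers them. The command names, the `FakeVisionSystem` class, and the fixed return values are hypothetical and serve only to show how a robot program might sequence such calls; real controllers expose them through their own languages and interfaces.

```python
from enum import Enum


class VisionCommand(Enum):
    """Hypothetical command vocabulary covering the four command types."""
    START_SEQUENCE = "start"
    STOP_SEQUENCE = "stop"
    TRIGGER = "trigger"
    GET_PICK_POSE = "get_pick_pose"
    GET_INSPECTION_RESULT = "get_inspection_result"


class FakeVisionSystem:
    """Stand-in for a vision system; returns constant results for illustration."""

    def __init__(self) -> None:
        self.running = False
        self.frames = 0

    def handle(self, command: VisionCommand) -> dict:
        if command is VisionCommand.START_SEQUENCE:
            self.running = True
            return {"ok": True}
        if command is VisionCommand.STOP_SEQUENCE:
            self.running = False
            return {"ok": True}
        if not self.running:
            # All other commands require an active processing sequence.
            return {"ok": False, "error": "sequence not started"}
        if command is VisionCommand.TRIGGER:
            self.frames += 1
            return {"ok": True, "frame": self.frames}
        if command is VisionCommand.GET_PICK_POSE:
            return {"ok": True, "pose": (0.40, -0.12, 0.05)}
        if command is VisionCommand.GET_INSPECTION_RESULT:
            return {"ok": True, "passed": True}
        raise ValueError(f"unsupported command: {command}")


def robot_cycle(vision: FakeVisionSystem) -> tuple:
    """One cycle: start, trigger, fetch pose and inspection result, stop."""
    vision.handle(VisionCommand.START_SEQUENCE)
    vision.handle(VisionCommand.TRIGGER)
    pose = vision.handle(VisionCommand.GET_PICK_POSE)["pose"]
    passed = vision.handle(VisionCommand.GET_INSPECTION_RESULT)["passed"]
    vision.handle(VisionCommand.STOP_SEQUENCE)
    # The inspection result decides the follow-up action.
    return ("place" if passed else "eject", pose)


if __name__ == "__main__":
    print(robot_cycle(FakeVisionSystem()))
```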

Parameter adjustments affect, for example:

Runtime parameters:

Adjustment of threshold values, tolerances, or filter settings in the vision system to react to different product variants or environmental conditions.

Gripping strategies and tool parameters:

Dynamic adjustment of the gripping force, speed, or tool type based on the object data supplied by the vision system.

Position and path corrections:

Transferring correction values from image processing for the precise execution of robot movements.
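A position correction of this kind can be illustrated with a planar example. The sketch assumes that a grip point was taught relative to the part and that the vision system reports the part's pose in the robot's base frame; the corrected grip pose is then the composition of both transforms. The names `Pose2D` and `corrected_grip_pose` and the restriction to two dimensions are simplifications made for this example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Planar pose: position in meters, rotation in radians."""
    x: float
    y: float
    theta: float


def corrected_grip_pose(part_in_base: Pose2D, grip_in_part: Pose2D) -> Pose2D:
    """Compose the measured part pose with the taught grip offset.

    The grip offset is rotated by the part's measured orientation and then
    shifted by the part's measured position.
    """
    c, s = math.cos(part_in_base.theta), math.sin(part_in_base.theta)
    return Pose2D(
        x=part_in_base.x + c * grip_in_part.x - s * grip_in_part.y,
        y=part_in_base.y + s * grip_in_part.x + c * grip_in_part.y,
        theta=part_in_base.theta + grip_in_part.theta,
    )


if __name__ == "__main__":
    # Taught once: grip point 10 cm along the part's own x axis.
    taught = Pose2D(0.10, 0.0, 0.0)
    # Measured by the vision system: part rotated by 90 degrees.
    measured = Pose2D(0.50, 0.20, math.pi / 2)
    print(corrected_grip_pose(measured, taught))
```

With the part rotated by 90 degrees, the taught offset along the part's x axis ends up along the base frame's y axis, so the robot grips the same spot on the part regardless of how it was placed.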

Tips for successful integration

Successfully integrating machine vision into robotics applications requires careful planning and attention to technical details. The following tips will help you overcome typical challenges and implement an efficient, reliable solution:

Select the interface that best suits your robot platform and your application requirements (e.g. REST API, OPC UA, manufacturer-specific plugins).

Check the compatibility of the camera, vision software, and robot controller early on in the project.

Use existing sample codes and templates for fast and error-free integration.

Make sure that the network and communication parameters are configured correctly to ensure stable data transmission.

Allow sufficient time for testing and validation of the overall solution, especially for complex or safety-critical applications.

Document interfaces and processes clearly to facilitate maintenance and extensions.

Machine vision and 3D vision can be successfully integrated into the robot controller if interfaces such as REST API or ROS are used in a targeted manner and all components are optimally coordinated with each other. When selecting the right robot interface, the focus should be on compatibility, real-time capability, and scalability in order to implement precise and flexible automation solutions.

Real-life examples and use cases

Practical examples show how machine vision systems are used in robotics. Typical applications, such as bin picking, pick-and-place, or quality control, illustrate the benefits of combining machine vision and robot technology. The following use cases illustrate how Basler cameras and software solutions enable efficient, flexible, and reliable automation solutions.

Summary

The successful integration of machine vision in robotics applications opens up new possibilities for precise, flexible, and efficient automation solutions. Various interfaces, such as REST API, OPC UA, ROS, gRPC, or manufacturer-specific plugins, enable seamless communication between machine vision systems and robot controllers.

3D vision offers decisive advantages, such as reliable object recognition and dynamic adaptation to complex environments. Real-life examples, such as pick-and-place, bin picking, and quality control, show how efficiently modern vision solutions can be used in robotics.

Selecting the right interface and carefully planning the integration are crucial for the success of the project. This allows image processing and robotics to be optimally combined to meet the requirements of modern production environments.