3D image processing

Robots with three-dimensional perception

Robots that grip goods quickly and precisely were long difficult to implement. With modern 3D image processing, robots can now perceive space and interact with objects almost as a human would. This technology is transforming image processing and is becoming increasingly important.

How advances in vision technology are transforming robotics

Discover the world of vision-guided robotics. Our webinar shows which 2D and 3D vision technologies are best suited to your applications. We highlight typical tasks in handling, logistics, process control, and quality control. Learn which criteria to consider when selecting your vision components.

Different methods and areas of application

3D image processing opens up entirely new possibilities in robotics, industrial automation, and logistics. The technology is particularly relevant when the volume, shape, or 3D position and orientation of objects must be captured precisely. But what technology lies behind the generation of three-dimensional images? Four methods currently dominate this field: time of flight, laser triangulation, stereo vision, and structured light.

Stereo vision and structured light

Stereo vision works in much the same way as human eyes. Two 2D cameras capture images of an object from two different positions, and the 3D depth information is calculated using the principle of triangulation. This can be difficult with homogeneous surfaces and in poor lighting conditions, because the data is often too ambiguous to produce reliable results. The problem can be solved with structured light, which gives the images a clear, predefined structure.
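The triangulation step described above can be illustrated with the textbook relation for a rectified stereo pair, Z = f · B / d. The following is a minimal sketch, not the method of any particular camera: it assumes an idealized pinhole model, and the function name, the focal length, and the baseline are hypothetical values chosen for illustration.

```python
def depth_from_disparity(focal_length_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Depth along the optical axis for a rectified stereo pair: Z = f * B / d.

    focal_length_px: focal length expressed in pixels (assumed known from calibration)
    baseline_m:      distance between the two camera centers, in meters
    disparity_px:    horizontal shift of the same point between the two images
    """
    if disparity_px <= 0:
        # Zero disparity means no valid match or a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical setup: 1000 px focal length, 10 cm baseline.
for d in (100.0, 50.0, 10.0):
    z = depth_from_disparity(1000.0, 0.10, d)
    print(f"disparity {d:5.1f} px -> depth {z:.2f} m")
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for near ones. This is one reason why unreliable matches on homogeneous surfaces degrade the result so strongly.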

Areas of application

Stereo vision covers a wide range of uses, particularly when the surroundings must be captured with high precision. To achieve this precision, however, additional reference marks, random patterns, or light patterns are required. These are introduced into the image by a structured light source. The technology is well suited to coordinate metrology and to the 3D measurement of workspaces. It is generally used in production environments only when a high processor load and higher system costs are acceptable.

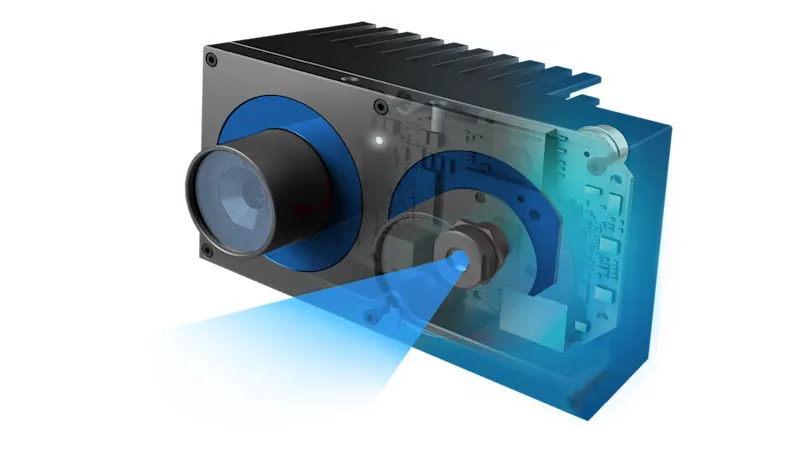

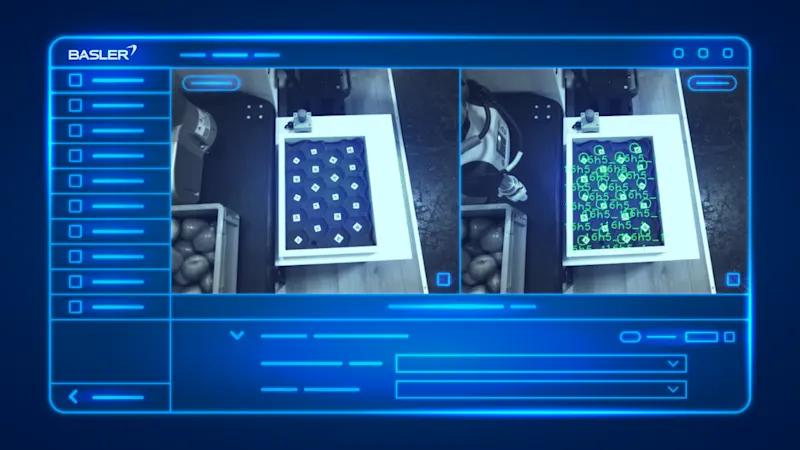

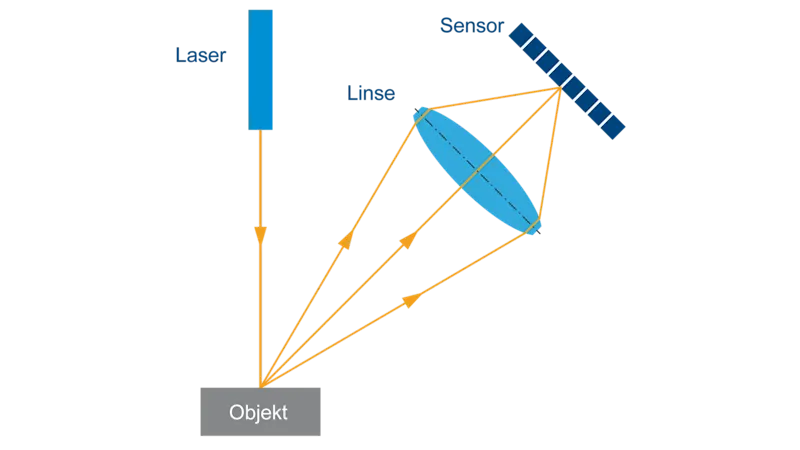

Laser triangulation

Laser triangulation uses a 2D camera and a laser light source to measure the distance to objects. The laser projects lines onto the object, and the camera captures them. If the distance to the object changes, the position of the laser line in the image shifts, which allows the distance to be calculated.
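The relationship between line shift and distance can be sketched with a deliberately simplified geometry. The example assumes that the camera looks straight down at the reference plane, that the laser plane is tilted by a known angle from the camera's optical axis, and that the image scale (millimeters per pixel) is constant. The function name and all numeric values are hypothetical; a real system would rely on a full calibration instead.

```python
import math


def height_from_line_shift(shift_px: float,
                           mm_per_px: float,
                           laser_angle_deg: float) -> float:
    """Height change that produces an observed lateral shift of the laser line.

    Simplified model (assumption): camera perpendicular to the reference
    plane, laser plane tilted by laser_angle_deg from the optical axis.
    A surface raised by h moves the line sideways by h * tan(angle),
    so h = lateral_shift / tan(angle).
    """
    if not 0.0 < laser_angle_deg < 90.0:
        raise ValueError("laser angle must be between 0 and 90 degrees")
    lateral_shift_mm = shift_px * mm_per_px
    return lateral_shift_mm / math.tan(math.radians(laser_angle_deg))


# Hypothetical values: 0.1 mm per pixel, laser tilted by 30 degrees.
for shift in (5.0, 10.0, 20.0):
    h = height_from_line_shift(shift, 0.1, 30.0)
    print(f"line shift {shift:4.1f} px -> height change {h:.3f} mm")
```

In this model, a larger laser angle produces a larger line shift for the same height change, which improves height resolution at the cost of more occlusion on steep object edges.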

Areas of application

Thanks to structured light, laser triangulation delivers precise results in 3D image processing, even with low-contrast objects and difficult lighting conditions. The method is well suited to applications that require high precision. Laser triangulation is widely used in inspection, 3D surveying, manufacturing, and technology.

Time of flight (ToF) for precise depth measurement

Time of flight (ToF) is an efficient technology for measuring depth and determining distances. A ToF camera provides both an intensity value and a depth value for each pixel, the latter indicating the distance to the object. The ToF method can generate detailed point clouds in real time, together with an intensity image and a confidence image.
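Two ideas in this paragraph can be made concrete: distance follows from the round-trip time of light, and a per-pixel depth value becomes a 3D point once the camera intrinsics are known. The sketch below assumes an ideal pinhole camera and a depth value measured along the optical axis. The function names and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative assumptions, not the interface of any specific ToF camera.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to the object: light travels there and back, so d = c * t / 2."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def pixel_to_point(u: float, v: float, depth_m: float,
                   fx: float, fy: float, cx: float, cy: float):
    """Back-project pixel (u, v) with depth along the optical axis to (x, y, z).

    fx, fy: focal lengths in pixels; cx, cy: principal point (assumed values).
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# A round trip of 20 nanoseconds corresponds to roughly 3 m.
print(f"{distance_from_round_trip(20e-9):.3f} m")

# Hypothetical intrinsics for a 640 x 480 sensor.
print(pixel_to_point(420, 240, 2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0))
```

Applying `pixel_to_point` to every pixel of a depth image yields a point cloud. Confidence values, where a camera provides them, can be used to discard unreliable pixels before this step.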

Areas of application

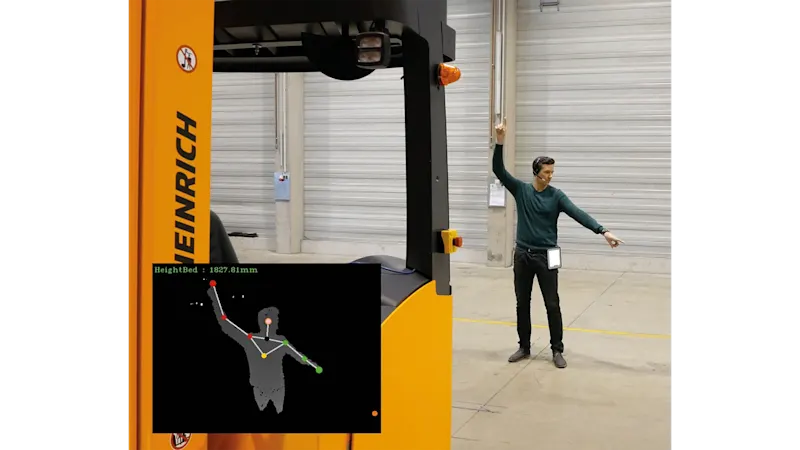

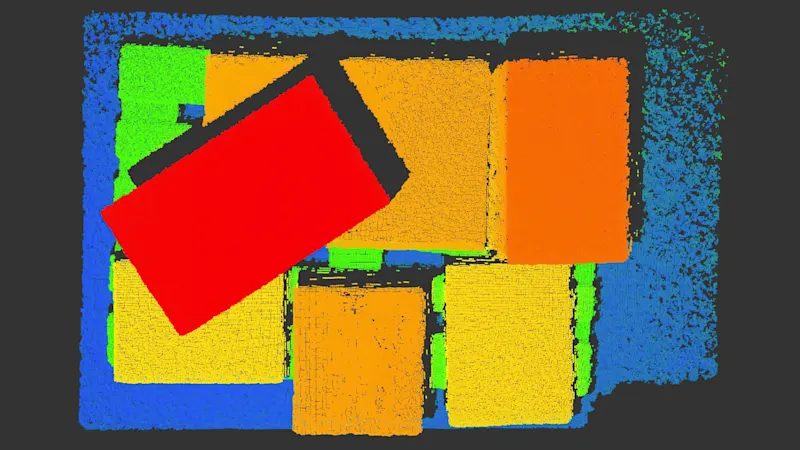

ToF is ideal for volume measurement, palletizing tasks, autonomous vehicles, and robot control in industrial automation. Other applications can be found in medicine, such as patient monitoring and positioning.

Which technology suits my application?

As with 2D cameras, there is no universal solution for every 3D image processing task. To select the best 3D camera technology, the requirements of the specific application must be considered carefully.

Decisive factors when choosing a 3D technology

Important questions when choosing the right technology include: Must the position, shape, orientation, or presence of objects be detected? What accuracy is required? What is the surface condition of the object? In addition, the working distance, the required speed, and the cost and complexity of the desired solution play a decisive role. These factors must be weighed against the capabilities of each 3D technology to make the best choice.

Our products for vision-guided robotics

Choose the right products for your vision system from our wide range. Our vision system configurator helps you assemble your system quickly and easily.